Eventual consistency for duplicated data

Changes list

| Author | Change |
|---|---|
| | Mapped WBS to Jira tickets |
| Raman Auramau | Review proposal together with dev team based on recent clarifications |
| Raman Auramau | 65115193 added |
| Raman Auramau | Review with Thunderjet team, provide estimates for work items |
| Raman Auramau | Add sequence diagrams for event producer and event consumer |
| Raman Auramau | Initial document |

Introduction

An analysis of data consistency issues in FOLIO Acq & Finance made it possible to group the known issues into several categories, one of which is consistency of duplicated data.

Brief context: a source module owns an entity; a particular field of that entity is duplicated into one or more entities of another module (e.g., for search, or filtering, or sorting). If the original field value in the source module is changed, the change is to be replicated everywhere.

Several consistency models can be considered to address this type of issue, though this particular proposal is based on the eventual consistency pattern. Very briefly, eventual consistency means that if the system is functioning and we wait long enough after any given set of inputs, we will eventually be able to know what the state of the database is, and any further reads will be consistent with our expectations. In other words, changes are guaranteed to be applied, but possibly with a delay (asynchronously).

The FOLIO platform has little experience in implementing and applying this approach. This proposal therefore aims to produce a high-level and a low-level design based on this approach, scoped to a particular use case, UIREC-148 (separated from UIREC-135):

https://issues.folio.org/browse/UIREC-148

Scenario: Data consistency

- Given piece is connected to item record

- When editing or creating piece

- Then Enumeration and Chronology fields update the corresponding fields in the item record

- AND if they are edited in inventory item record the corresponding fields are updated in the piece

Once designed, implemented and delivered, the result is to be reviewed to confirm whether it can be adopted as a FOLIO-wide pattern for addressing similar consistency issues.

Main pillars

The main pillars list the very basic principles of this solution:

- Every use case allows a single source of truth to be identified

- Eventual consistency is guaranteed, but atomic consistency is not

- Low coupling between modules

Design

High-level design

At a high level, this proposal is based on the domain event pattern, which is widely used in distributed systems. According to this pattern, a service (or module, or app) emits so-called domain events when domain objects or data change, and publishes these domain events to a notification channel so that they can be consumed by other services. The solution therefore consists of the following parts:

- event producer,

- notification channel, and

- event consumer.

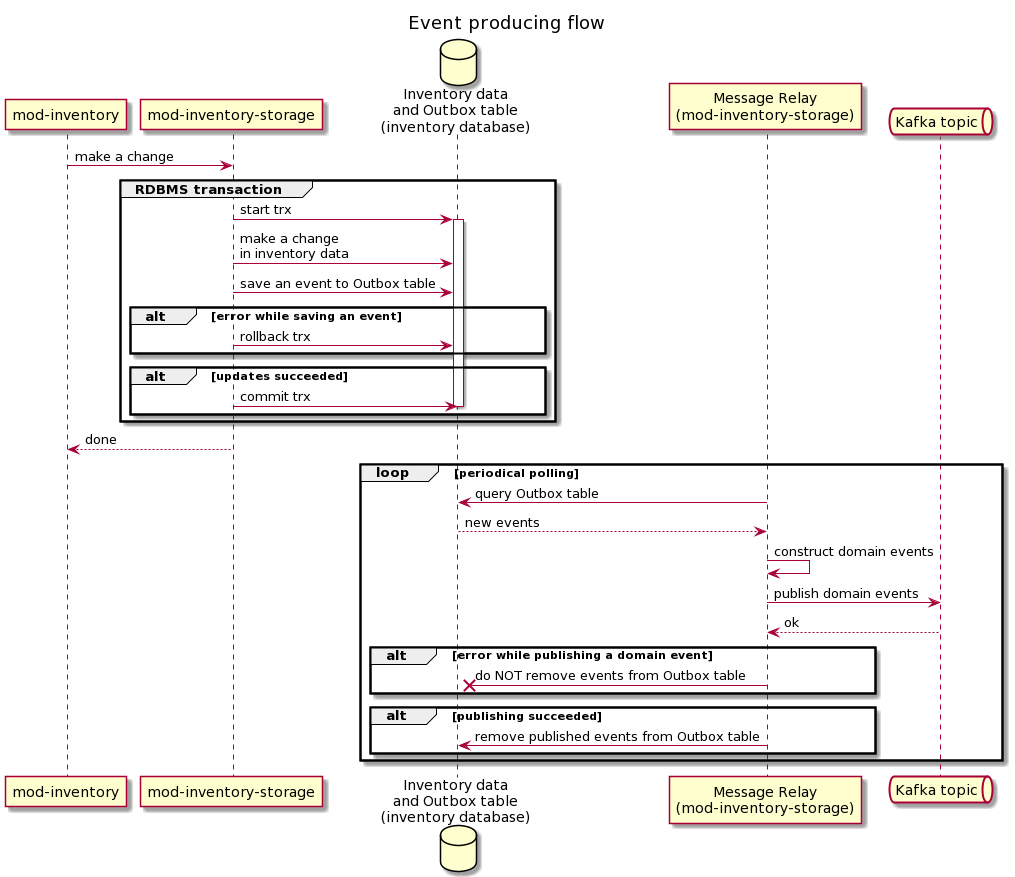

The responsibility of the event producer is to ensure that any change in its data (a domain object being created, updated or deleted) emits a dedicated domain event containing the information required to identify at least the source module, the changed object and the event that happened. It also has to guarantee that all emitted domain events are published to the notification channel. Note that the event producer does not send domain events to a particular consumer; it just publishes events to a channel where they can be consumed by any consumer. Technically, every FOLIO module acting as a domain event source is to have event producer logic.

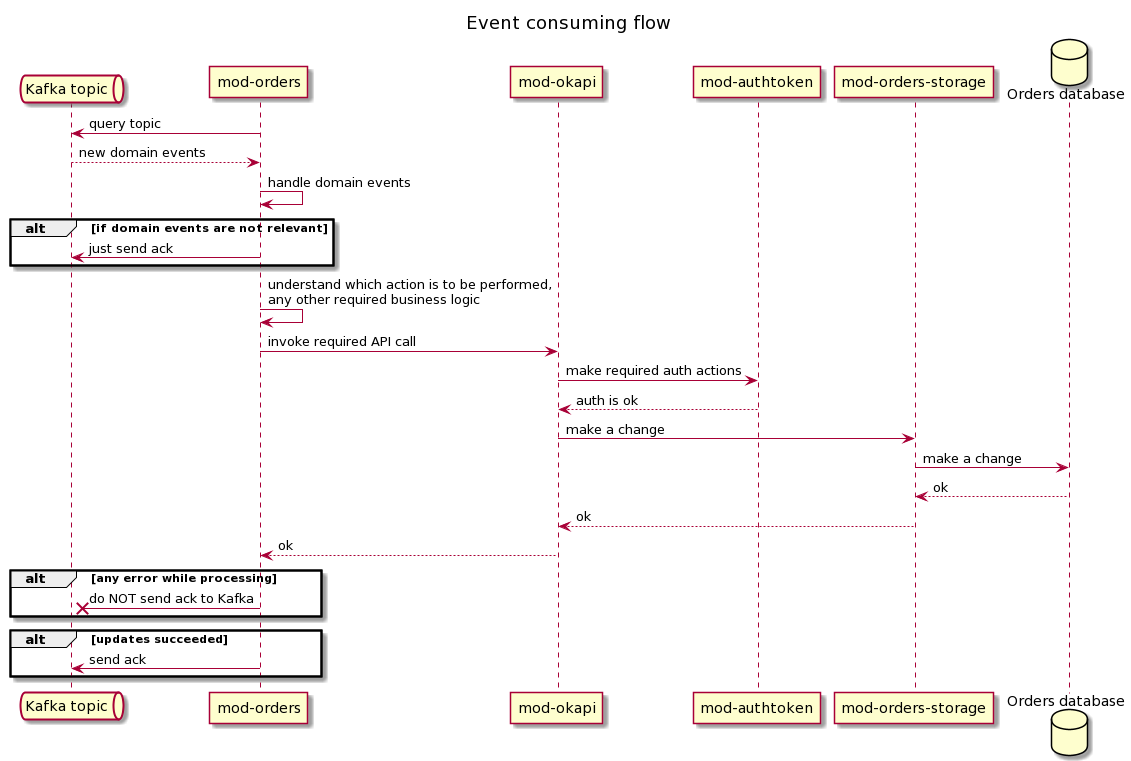

In turn, the event consumer is responsible for reading domain events from the notification channel and processing them according to its own business logic. From the perspective of eventual consistency, the event consumer is to acknowledge consumption of an event only after full processing on its side (including the database update). Every FOLIO module acting as a destination is to have event consumer logic.
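The acknowledge-after-processing rule can be illustrated with a minimal in-memory sketch. It does not use a Kafka client: `InMemoryLog`, `DestinationStore` and the event fields `recordId` and `enumeration` are hypothetical names introduced only for illustration. The sketch shows that a crash between processing and acknowledging leads to the same event being delivered again, so the handler has to tolerate repeats.

```python
from dataclasses import dataclass, field


@dataclass
class InMemoryLog:
    """Hypothetical stand-in for one topic partition and one consumer group's offset."""
    events: list = field(default_factory=list)
    committed: int = 0  # offset of the next event to deliver

    def poll(self, max_records=10):
        # Return (offset, event) pairs starting at the last committed offset.
        end = min(self.committed + max_records, len(self.events))
        return [(i, self.events[i]) for i in range(self.committed, end)]

    def commit(self, offset):
        # Acknowledge everything up to and including `offset`.
        self.committed = offset + 1


class DestinationStore:
    """Hypothetical destination storage holding the duplicated field."""

    def __init__(self):
        self.enumeration = {}  # record id -> duplicated value
        self.applied = 0       # how many times an event was applied

    def apply(self, event):
        # Overwriting the field with the value carried by the event is
        # idempotent: applying the same event twice gives the same state.
        self.enumeration[event["recordId"]] = event["enumeration"]
        self.applied += 1


def consume(log, store, fail_before_commit=False):
    for offset, event in log.poll():
        store.apply(event)  # full processing, including the "database" update
        if fail_before_commit:
            raise RuntimeError("crashed after processing, before acknowledging")
        log.commit(offset)  # acknowledge only now


log = InMemoryLog(events=[{"recordId": "item-1", "enumeration": "v.1"}])
store = DestinationStore()
try:
    consume(log, store, fail_before_commit=True)
except RuntimeError:
    pass
consume(log, store)  # after a restart the same event is delivered again
print(store.enumeration, store.applied, log.committed)
```

The event is applied twice, yet the stored value is the same as after a single application. With a real Kafka consumer the equivalent would be disabling auto-commit and committing offsets explicitly after the handler returns.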

The notification channel sits between the event producer and the event consumer. There should be a general notification delivery channel for transferring domain events from source modules to recipient modules. The channel must provide guaranteed delivery with at-least-once semantics, as well as data persistence and minimal latency. The Direct Kafka approach is recommended in this solution since it provides these characteristics.

So, a source module should be able to track changes in the data it owns and publish them as domain events to a specified Kafka topic. In turn, a recipient module should be able to connect to a specified Kafka topic, consume domain events and handle them appropriately.

Since, per the identified use cases, several modules can act as sources (or recipients), it makes sense to implement this logic as a small library with as much common code as possible and to re-use it in each current or future module, in order to reduce effort and speed up implementation.

Many source modules publish their domain events into a Kafka topic, aka the FOLIO Domain Event Bus, specifying the source, the event type (created, updated, moved, deleted etc.), the unique identifier of the changed record, and other details if needed. Recipient modules connect to that topic with different consumer groups and process the events. Please refer to Apache Kafka Messaging System for more details regarding Kafka best practices.
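As an illustration of what such an event could carry, here is a minimal sketch of an event envelope. The class name `DomainEvent`, its field names and the `event_id` field are assumptions made for this example and are not an agreed FOLIO schema. The sketch also assumes the record identifier is used as the message key, so that all events for one record land in the same partition.

```python
import json
import uuid
import zlib
from dataclasses import asdict, dataclass, field


@dataclass(frozen=True)
class DomainEvent:
    """Hypothetical envelope; field names are illustrative, not a FOLIO schema."""
    source: str      # module that owns the record, e.g. "mod-inventory-storage"
    event_type: str  # "CREATED", "UPDATED", "MOVED", "DELETED", ...
    record_id: str   # unique identifier of the changed record
    payload: dict = field(default_factory=dict)  # other details if needed
    event_id: str = field(default_factory=lambda: str(uuid.uuid4()))

    def key(self) -> bytes:
        # Assumption: the record id is the message key.
        return self.record_id.encode("utf-8")

    def value(self) -> bytes:
        return json.dumps(asdict(self), sort_keys=True).encode("utf-8")


def partition_for(key: bytes, partitions: int) -> int:
    # Illustrative stable hash only. Kafka's default partitioner hashes the
    # key with murmur2, not CRC32; the point is that equal keys map to the
    # same partition.
    return zlib.crc32(key) % partitions


first = DomainEvent("mod-inventory-storage", "UPDATED", "item-1", {"enumeration": "v.1"})
second = DomainEvent("mod-inventory-storage", "UPDATED", "item-1", {"enumeration": "v.2"})
print(partition_for(first.key(), 8) == partition_for(second.key(), 8))
```

Both events share a key and therefore a partition, which is what preserves their relative order for a consumer.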

High-level diagram describing this proposal is shown below.

General provisions

- The event producer is to be located in the module acting as the single source of truth

- The event producer is to be located as close to the data storage as possible

  - If an application follows the pattern of splitting into 2 modules (business-logic + data-access), then the event producer should be located in mod-*-storage

  - The purpose is to reduce the gap between a change in the data storage and event publishing

- The transactional outbox pattern is required in the event producer to reliably update the database and publish events

  - This is a trade-off between consistency and performance, since the outbox introduces an additional delay (at most one polling interval)

- Proper topic partitioning is to be configured

  - Refer to Apache Kafka Messaging System#Topicpartitioning for more information

  - With proper partitioning, all domain events with the same instance_id go to one and the same partition, which in turn supports correct event ordering even with one or more event consumers

- The event consumer can be located in either the business-logic module or the storage module

  - There are no strict criteria, though it makes more sense to locate it in the business-logic module if additional processing logic is required (e.g., consume the event, request additional information from the storage module or other modules, make checks or verifications etc.), while straightforward logic (e.g., just updating a field) can be located in the storage module

- The event consumer can read events in batches, though the inner processing is to be sequential

- Commit of read messages to Kafka (acknowledgement) is to be performed only after full processing

  - Auto-commit is not allowed

  - Another option is a transactional inbox

- The solution is to support at-least-once delivery; consequently, the event consumer is to be idempotent and able to process a repeated event

- A Dead Letter Queue is to be configured so that broken or invalid events can be removed from the main Kafka topic while being kept for additional processing

  - Configurable retries, Kafka events compaction etc. can be implemented here

- A client library for Direct Kafka is to be implemented and re-used

- Performance impact estimate

  - The only place with a potential performance impact is the outbox implementation added to the event producer, where additional data is written into an outbox table

- Delays estimate

  - The delay between the moment of a change in the producer and the moment of receiving an event about it in the consumer consists of the following components

    - the outbox pattern and the period of polling the outbox table by a separate Message Relay process (say, 1 s)

    - network delays while transmitting an event to and from Kafka

    - a "polling" period in the event consumer (say, 1 s)

  - So, theoretically this can take up to a few seconds, though higher delays can happen in practice due to real-world circumstances
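The transactional outbox provision can be sketched as follows. This is a minimal illustration using an in-memory SQLite database in place of the module's real storage; the table names `item` and `outbox`, the column names and the `publish` callback are all hypothetical. The data change and the outbox row are written in one local transaction, and a separate relay step later publishes unsent rows in insertion order.

```python
import json
import sqlite3

db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE item (id TEXT PRIMARY KEY, enumeration TEXT)")
db.execute(
    "CREATE TABLE outbox (seq INTEGER PRIMARY KEY AUTOINCREMENT, "
    "record_id TEXT, body TEXT, sent INTEGER DEFAULT 0)"
)


def update_item(item_id, enumeration):
    # One local transaction covers both the data change and the outbox row,
    # so either both are stored or neither is.
    with db:
        db.execute(
            "INSERT OR REPLACE INTO item (id, enumeration) VALUES (?, ?)",
            (item_id, enumeration),
        )
        db.execute(
            "INSERT INTO outbox (record_id, body) VALUES (?, ?)",
            (item_id, json.dumps({"type": "UPDATED", "enumeration": enumeration})),
        )


def relay_once(publish):
    # Message relay step: read unsent rows in insertion order, publish each,
    # then mark it as sent. If publish raises, the row stays unsent and is
    # picked up again on the next poll.
    rows = db.execute(
        "SELECT seq, record_id, body FROM outbox WHERE sent = 0 ORDER BY seq"
    ).fetchall()
    for seq, record_id, body in rows:
        publish(record_id, body)
        with db:
            db.execute("UPDATE outbox SET sent = 1 WHERE seq = ?", (seq,))
    return len(rows)


published = []
update_item("item-1", "v.1")
update_item("item-1", "v.2")
relay_once(lambda key, body: published.append((key, json.loads(body))))
print(published)
```

Because a row is marked as sent only after publishing, a crash between the two steps results in the event being published again on the next poll. This matches the at-least-once delivery and idempotent consumer provisions above. In a real module the relay would run on a timer, which is where the polling-interval delay comes from.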

Low-level design on the example of UIREC-148

Sequence diagramming

Below are sequence diagrams providing the runtime process details.

Work breakdown structure

Note: need to be reviewed once again

Below is the list of work items required to implement and deliver this solution.

| Task | Ticket | Estimation (SP) |
|---|---|---|
| Analyze domain event pattern implementation in mod-inventory-storage and mod-search (or mod-remote-storage) | MODORDERS-559 | 3 |
| Refactor event producer in mod-inventory-storage | | 3 |
| Implement event consumer in mod-orders | MODORDSTOR-242 | 2 |
| Implement business logic (mod-orders) to handle a domain event | MODORDERS-561 | 3 |
| Simple DLQ (Dead Letter Queue) implementation. As a temporary solution, log wrong events (events containing errors) | | |
| A user can see all the data but these Enumeration & Chronology fields are not editable | | |
| Update receiving history view | MODORDSTOR-240 | 2 |
| Conduct functional testing | | |
| Update documentation (as a part of SA work, not for dev team) | | |

- Note: if a new library for the event producer / consumer is created, it would make sense to ask other teams to integrate it into mod-search (team?) and mod-remote-storage (Firebird team)

Additional items

Single source of truth

The scenario mentioned in the very beginning says that "Given piece is connected to item record When editing or creating piece Then Enumeration and Chronology fields update the corresponding fields in the item record AND if they are edited in inventory item record the corresponding fields are updated in the piece".

In its current wording, this can be understood as requiring bidirectional synchronization: from inventory to orders, and from orders to inventory. However, a literal implementation of this reading violates the principle of a single source of truth and may lead (most likely, will lead) to an endless loop of domain events.

To avoid that, only one module is to be considered as a single source of truth (in this particular case - inventory), and any changes in its data should be synchronized to another module (in this particular case - orders).

Please note that this limitation is on data level - it specifies who owns the data, and which module provides a platform API for data management.

This means that more than one UI form still can work with this data.

Quick notes during the meeting

A piece can be related to an order but has no references to inventory item

When a piece has a reference to item, it's possible to consider inventory as a source of truth. Otherwise

UIREC-148 flow details

As was discussed, the piece workflow is more complex than it initially seemed. The reason is that the copy of a piece in either Orders or Inventory can be considered the single source of truth, depending on the scenario.

This section is aimed to document known use cases as the basis for further analysis.

| # | Use case | Is there a copy of the piece in Orders? | Is there a copy of the piece in Inventory? | Comments |
|---|---|---|---|---|
| 1 | A piece is created via Orders without the Create Item checkbox set | Yes | No | There is only one copy of the piece, stored in Orders; no synchronization is needed |
| 2 | A piece is created via Orders with the Create Item checkbox set. Piece status is EXPECTED | Yes | Yes | There are 2 copies of the piece in 2 apps, so they are to be synchronized. Note that the item here can be considered a stub because the piece is not received yet, so the piece in Orders is more real |
| 2.1 | A piece is updated in Orders | | | Updates are to be synchronized from Orders to Inventory |
| 2.2 | A piece is updated in Inventory | | | |
| 3 | A piece is received (checked in in the Receiving app). Piece status is RECEIVED | Yes | Yes | There are 2 copies of the piece in 2 apps. Note that the item here is real now because the piece is received |
| 3.1 | A piece is updated in Orders | | | A user can see all the data but these Enumeration & Chronology fields are not editable |
| 3.2 | A piece is updated in Inventory | | | Updates are to be synchronized from Inventory to Orders |
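The use cases in the table can be condensed into a small decision function. This is only a sketch of one possible reading: the names `PieceStatus`, `source_of_truth` and `should_sync` are hypothetical, and the result for use case 2.2 (a change made in Inventory while the piece is EXPECTED), which the table leaves open, is an assumption that such a change is not propagated.

```python
from enum import Enum
from typing import Optional


class PieceStatus(Enum):
    EXPECTED = "EXPECTED"
    RECEIVED = "RECEIVED"


def source_of_truth(status: PieceStatus, has_item: bool) -> Optional[str]:
    """Return the app treated as the owner of Enumeration & Chronology."""
    if not has_item:
        # Use case 1: the piece exists only in Orders, nothing to synchronize.
        return None
    # Use case 2: the item is a stub, Orders owns the data.
    # Use case 3: the item is real, Inventory owns the data.
    return "orders" if status is PieceStatus.EXPECTED else "inventory"


def should_sync(changed_in: str, status: PieceStatus, has_item: bool) -> bool:
    """Propagate a change only when it was made in the current source of truth.

    Assumption: a change made outside the source of truth (use cases 2.2
    and 3.1) is not propagated. For 2.2 the table gives no decision.
    """
    return source_of_truth(status, has_item) == changed_in


print(should_sync("orders", PieceStatus.EXPECTED, has_item=True))     # use case 2.1
print(should_sync("inventory", PieceStatus.RECEIVED, has_item=True))  # use case 3.2
print(should_sync("orders", PieceStatus.RECEIVED, has_item=True))     # use case 3.1
```

Keeping the direction of synchronization a function of the piece status means that at any moment only one side publishes changes for these fields, which avoids the event loop that bidirectional synchronization would cause.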

Meeting notes

The status of the piece record - EXPECTED or RECEIVED

- EXPECTED - orders is the source of truth

- not 100% but for the most cases it's true e.g. exception - adding a barcode ('cause barcode is only stored in inv item)

- but as for Enumeration & Chronology we can assume Receiving app is the best guy to trust

- Receiving app has an option to update barcode as well though it invokes Inventory API for that, and Receiving App doesn't store barcode

- Do we need to make ongoing sync from Receiving App to Inventory while EXPECTED status? - Yes

- just to note - these Enumeration & Chronology fields are to be considered

- What about suppress for discovery?

- RECEIVED - inventory item is the source of truth

- ideally, the changes in inv items are to be synced to orders

- the reason is that some orders are always open (a kind of series of orders)

- for UX - a user can see all the data but these Enumeration & Chronology fields are not editable