Temporary Kafka security solution

Business purpose

Ideally, Folio should have a single authentication and authorization system for all of its components, including Kafka, so that everything can be configured and managed in one place.

To make this happen, a custom security solution should be implemented for Kafka to integrate it with Folio authentication and authorization. Folio should also provide capabilities to easily integrate new components with its security system.

However, implementing such a custom solution would take more time than using Kafka's out-of-the-box security features.

For that reason, a temporary Kafka security solution has been designed.

It is intended for features that:

- connect to Kafka directly instead of using mod-pubsub

- must be released before the preferred Kafka security design is finished and approved.

The temporary solution should satisfy the following criteria:

- It should be relatively easy to implement. For this purpose, Kafka's out-of-the-box security features should be used as much as possible.

- It should be secure enough to be used in production. Only production-ready security features should be leveraged.

- It should take into account all security aspects that the development team is responsible for. Security measures that are the responsibility of hosting providers are out of the scope of this solution.

High-level solution overview

Kafka security covers the following areas. The preferred security feature for each area is summarized below:

Authentication

Kafka authentication: SASL/SCRAM-SHA-256

ZooKeeper authentication: SASL/DIGEST-MD5

Authorization

Kafka authorization: kafka.security.authorizer.AclAuthorizer

Data in transit encryption

Use TLS (SSL) to encrypt traffic between:

- Kafka clients and brokers

- Kafka brokers and ZooKeeper nodes

Data at rest encryption

It is the responsibility of a hosting provider to select, deploy, and configure a data at rest encryption solution for Kafka.

Data at rest encryption is out of the scope of this solution.

Each security feature and the rationale behind its selection are described below.

Authentication

There are several cases that require authentication:

- Client connections to Kafka brokers

- Kafka inter-broker connections

- Kafka tools authentication

- Kafka broker connections to ZooKeeper

- ZooKeeper node-to-node authentication for leader election

Out of the box, Kafka provides the following authentication approaches:

- mTLS

In this case, a certificate should be issued for each client and each Kafka broker. Each certificate should be periodically replaced with a new one to strengthen security, especially on the client side.

The certificates could be issued using the Kubernetes Certificates API, which obtains X.509 certificates from a Certificate Authority (CA).

The Certificate Authority is not part of Kubernetes and should be configured separately by each hosting provider.

Compared with SASL/SCRAM-SHA-256, this approach is more complex.

- SASL/GSSAPI (Kerberos), available since version 0.9.0.0

Folio currently doesn't include a Kerberos server, and it would take time to account for one in the design and to deploy and configure it. This would complicate both the overall solution and the Folio configuration work of hosting providers.

This approach shouldn't be used for the solution because of its complexity.

- SASL/PLAIN, available since version 0.10.0.0

From the Kafka documentation:

Kafka supports a default implementation for SASL/PLAIN which can be extended for production use.

The default implementation of SASL/PLAIN in Kafka specifies usernames and passwords in the JAAS configuration file.

Storing clear passwords on disk should be avoided by configuring custom callback handlers that obtain username and password from an external source.

As a result, this approach would have to be extended before being used in production. This can take extra time and makes the approach less preferable than SASL/SCRAM-SHA-256 and SASL/SCRAM-SHA-512.

- SASL/SCRAM-SHA-256 and SASL/SCRAM-SHA-512, available since version 0.10.2.0

The default SCRAM implementation in Kafka stores SCRAM credentials in Zookeeper and is suitable for use in Kafka installations where Zookeeper is on a private network.

It is the responsibility of the hosting provider to set up a private network for ZooKeeper.

Client credentials may be created and updated dynamically and updated credentials will be used to authenticate new connections.

This is the preferred authentication approach for the temporary solution, compared with the others listed here, because it is secure enough to be used in production and it is easier to adopt than the other approaches: it doesn't require any additional components to be introduced into Folio or any custom logic to be implemented.

- SASL/OAUTHBEARER, available since version 2.0

Kafka's out-of-the-box implementation of this mechanism shouldn't be used in production.

From the Kafka documentation:

The default OAUTHBEARER implementation in Kafka creates and validates Unsecured JSON Web Tokens and is only suitable for use in non-production Kafka installations.

Instead, a custom implementation would have to be developed and integrated with the rest of Folio security, which can take more time than using the other out-of-the-box solutions.

Out of the box, Zookeeper provides the following authentication approaches:

- mTLS

See the mTLS description for Kafka above.

- SASL/Kerberos

See the SASL/GSSAPI (Kerberos) description for Kafka above.

- SASL/DIGEST-MD5

This is the preferred authentication approach for the temporary solution because it is relatively simple compared with the others listed here.

Considering all of the arguments above, SASL/SCRAM-SHA-256 (or SASL/SCRAM-SHA-512) Kafka authentication should be used for the following cases:

- Client connections to Kafka brokers

- Kafka inter-broker connections

- Kafka tools authentication
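As a minimal sketch of what this choice looks like on the client side, the Python helper below assembles the standard Kafka client settings for SASL/SCRAM-SHA-256 over TLS and renders them as .properties lines. The helper names, user name, password, and truststore path are hypothetical placeholders; in a real Folio module these values would come from the module configuration (and the password from a secrets store) rather than from literals.

```python
def scram_client_properties(username: str, password: str,
                            truststore_path: str, truststore_password: str) -> dict[str, str]:
    """Kafka client settings for SASL/SCRAM-SHA-256 over TLS (hypothetical helper)."""
    jaas = (
        "org.apache.kafka.common.security.scram.ScramLoginModule required "
        f'username="{username}" password="{password}";'
    )
    return {
        "security.protocol": "SASL_SSL",              # SASL authentication inside a TLS connection
        "sasl.mechanism": "SCRAM-SHA-256",
        "sasl.jaas.config": jaas,                     # the username becomes the principal User:<username>
        "ssl.truststore.location": truststore_path,   # CA used to verify the broker certificates
        "ssl.truststore.password": truststore_password,
    }


def to_properties(settings: dict[str, str]) -> str:
    """Render settings as key=value lines (naive: no escaping of special characters)."""
    return "\n".join(f"{key}={value}" for key, value in settings.items())


# Placeholder values for illustration only.
print(to_properties(scram_client_properties(
    "ModDataImportSystemUser", "change-me",
    "/etc/kafka/truststore.jks", "truststore-secret")))
```

The same keys can be passed directly to a Kafka producer or consumer constructor; the .properties rendering is only a convenience for the CLI tools, which accept a client config file.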

SASL/DIGEST-MD5 ZooKeeper authentication should be used for the following cases:

- Kafka broker connections to ZooKeeper

- ZooKeeper node-to-node authentication for leader election
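A sketch of the two JAAS sections involved in SASL/DIGEST-MD5 between Kafka brokers and ZooKeeper, produced by small hypothetical Python helpers: a Server section on each ZooKeeper node that lists the allowed users, and a Client section on each broker. Both JAAS files are typically passed to the JVM through -Djava.security.auth.login.config. The user name and password are placeholders, and node-to-node (quorum) authentication uses separate settings that are not shown here.

```python
def zookeeper_server_jaas(users: dict[str, str]) -> str:
    """JAAS 'Server' section for ZooKeeper nodes: one user_<name>="<password>" per allowed client."""
    entries = "\n".join(f'    user_{name}="{password}"' for name, password in users.items())
    return ("Server {\n"
            "    org.apache.zookeeper.server.auth.DigestLoginModule required\n"
            f"{entries};\n"
            "};\n")


def broker_zookeeper_jaas(username: str, password: str) -> str:
    """JAAS 'Client' section a Kafka broker uses to log in to ZooKeeper."""
    return ("Client {\n"
            "    org.apache.zookeeper.server.auth.DigestLoginModule required\n"
            f'    username="{username}"\n'
            f'    password="{password}";\n'
            "};\n")


# zoo.cfg entry enabling SASL authentication on the ZooKeeper nodes.
ZOO_CFG = {"authProvider.1": "org.apache.zookeeper.server.auth.SASLAuthenticationProvider"}
# server.properties entry so that brokers set ACLs on the znodes they create.
BROKER_PROPERTIES = {"zookeeper.set.acl": "true"}

# Placeholder credentials; the same pair must appear on both sides.
print(zookeeper_server_jaas({"kafka": "zk-secret"}))
print(broker_zookeeper_jaas("kafka", "zk-secret"))
```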

Authorization

Kafka ships with a pluggable Authorizer. The Authorizer is configured by setting authorizer.class.name in the server.properties file.

AclAuthorizer is the out-of-the-box Authorizer implementation that should be used in the temporary solution. It uses ZooKeeper to store all access control lists (ACLs).

To enable the out-of-the-box implementation, specify the following in the server.properties file:

authorizer.class.name=kafka.security.authorizer.AclAuthorizer
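Enabling the authorizer usually goes together with a couple of related broker settings. The sketch below is a hypothetical Python helper that returns server.properties entries: it also lists super users, which bypass ACL checks and typically include the inter-broker user so that brokers can still talk to each other once ACLs are enforced, and it states allow.everyone.if.no.acl.found explicitly at its default of false. The class name above applies to Kafka 2.4 and later; older releases used kafka.security.auth.SimpleAclAuthorizer.

```python
def authorizer_settings(super_users: list[str]) -> dict[str, str]:
    """server.properties entries for ACL-based authorization (hypothetical helper)."""
    if not super_users:
        # Assumption of this sketch: the inter-broker user is given access as a super user.
        raise ValueError("expected at least the inter-broker user as a super user")
    return {
        "authorizer.class.name": "kafka.security.authorizer.AclAuthorizer",
        # Super users bypass ACL checks; entries are separated by ';'.
        "super.users": ";".join(f"User:{name}" for name in super_users),
        # Default value, stated explicitly: resources without ACLs are denied to non-super users.
        "allow.everyone.if.no.acl.found": "false",
    }


# "kafka-broker" is a placeholder name for the inter-broker SCRAM user.
for key, value in authorizer_settings(["kafka-broker"]).items():
    print(f"{key}={value}")
```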

ACLs are used to define rights to Kafka resources.

Kafka ACLs are defined in the general format of:

Principal P is [Allowed/Denied] Operation O From Host H on any Resource R matching ResourcePattern RP.

Principal:

When a client passes SASL/SCRAM-SHA-256 authentication (the preferred Kafka authentication approach for now), its username is used as the authenticated principal for authorization, for example User:ModDataImportSystemUser.

Since the temporary Kafka security solution isn't integrated with Folio security, Kafka users should, for now, be created independently of Folio. The temporary solution doesn't assume any mapping between Folio "system users" and Kafka users.
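Because Kafka users are managed independently of Folio, each one is created directly in Kafka. With the SCRAM mechanism, Kafka's kafka-configs.sh tool writes the user's credentials to ZooKeeper; the Python sketch below only assembles that command as an argument list and does not run it. The helper name and values are hypothetical, and the --zookeeper form assumes a Kafka version that manages SCRAM credentials through ZooKeeper (Kafka 2.7 and later can also do this through --bootstrap-server).

```python
import shlex


def scram_user_command(zookeeper: str, username: str, password: str,
                       mechanism: str = "SCRAM-SHA-256") -> list[str]:
    """Build (but do not run) a kafka-configs.sh call that creates or updates
    the SCRAM credentials of a Kafka user. Hypothetical helper."""
    if mechanism not in ("SCRAM-SHA-256", "SCRAM-SHA-512"):
        raise ValueError(f"unsupported SCRAM mechanism: {mechanism}")
    return [
        "bin/kafka-configs.sh",
        "--zookeeper", zookeeper,        # SCRAM credentials are stored in ZooKeeper
        "--alter",
        "--add-config", f"{mechanism}=[password={password}]",
        "--entity-type", "users",
        "--entity-name", username,       # authenticates as the principal User:<username>
    ]


# Placeholder values; a real password should come from a secrets store.
print(shlex.join(scram_user_command("localhost:2181", "ModDataImportSystemUser", "change-me")))
```

Passing the password on the command line exposes it in shell history and the process list, so a hosting provider may prefer to feed it from a secrets manager when running the command.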

Operation examples:

- Read

- Write

- Create

- Delete

- Alter

- Describe

- ClusterAction

Resource examples:

- Topic

- Cluster

- Consumer group

ResourcePattern

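Kafka supports defining bulk ACLs instead of specifying individual ACLs. Originally, a resource name in an ACL definition could only be either a full resource name or the special wildcard '*', which matches everything. Since Kafka 2.0 (KIP-290), prefixed resource patterns are also supported, so that a single ACL can match every resource whose name starts with a given prefix.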

Example:

Principal “UserA” has access to all topics that start with “com.company.product1.”

More info about ResourcePatterns: https://cwiki.apache.org/confluence/display/KAFKA/KIP-290%3A+Support+for+Prefixed+ACLs
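For the prefixed example above, a single ACL can cover every topic whose name starts with the com.company.product1. prefix. The Python sketch below only builds the corresponding kafka-acls.sh call as an argument list; the helper name, the ZooKeeper address, and the choice of Read as the granted operation are assumptions made for illustration.

```python
import shlex


def prefixed_topic_acl(zookeeper: str, user: str, prefix: str,
                       operations: list[str]) -> list[str]:
    """Build (but do not run) a kafka-acls.sh call for a PREFIXED topic ACL (hypothetical helper)."""
    cmd = ["bin/kafka-acls.sh",
           "--authorizer", "kafka.security.authorizer.AclAuthorizer",
           "--authorizer-properties", f"zookeeper.connect={zookeeper}",
           "--add", "--allow-principal", f"User:{user}"]
    for operation in operations:
        cmd += ["--operation", operation]
    # With the prefixed pattern type, the topic name is treated as a prefix, not a full name.
    cmd += ["--topic", prefix, "--resource-pattern-type", "prefixed"]
    return cmd


print(shlex.join(prefixed_topic_acl("localhost:2181", "UserA",
                                    "com.company.product1.", ["Read"])))
```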

ACLs should be created for each principal with the principle of least privilege in mind.

By default, any principal without an explicit ACL that allows an operation on a resource is denied access (as long as allow.everyone.if.no.acl.found keeps its default value of false). In the rare cases where an ACL allows access to all principals except some, the --deny-principal and --deny-host options have to be used.
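To make these evaluation rules concrete, here is a deliberately simplified Python model (not Kafka's actual implementation): a matching DENY ACL always wins, and without a matching ALLOW ACL access is denied. It ignores super users, implied operations (for example, Read implying Describe), and non-topic resources; the Acl class and the is_authorized function are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Acl:
    principal: str      # e.g. "User:UserA"; "User:*" matches every principal
    permission: str     # "ALLOW" or "DENY"
    operation: str      # e.g. "READ", or "ALL"
    pattern_type: str   # "LITERAL" or "PREFIXED"
    resource_name: str  # full topic name, prefix, or the literal wildcard "*"
    host: str = "*"     # "*" matches every host


def resource_matches(acl: Acl, topic: str) -> bool:
    if acl.pattern_type == "PREFIXED":
        return topic.startswith(acl.resource_name)
    return acl.resource_name in ("*", topic)


def is_authorized(acls: list[Acl], principal: str, host: str,
                  operation: str, topic: str) -> bool:
    """Simplified model: any matching DENY wins; otherwise a matching ALLOW is required."""
    matching = [acl for acl in acls
                if resource_matches(acl, topic)
                and acl.principal in (principal, "User:*")
                and acl.host in (host, "*")
                and acl.operation in (operation, "ALL")]
    if any(acl.permission == "DENY" for acl in matching):
        return False
    # Default deny: no matching ALLOW ACL means no access.
    return any(acl.permission == "ALLOW" for acl in matching)


acls = [
    Acl("User:*", "ALLOW", "READ", "PREFIXED", "com.company.product1."),
    Acl("User:UserB", "DENY", "READ", "LITERAL", "*"),
]
print(is_authorized(acls, "User:UserA", "10.0.0.5", "READ", "com.company.product1.orders"))   # True
print(is_authorized(acls, "User:UserB", "10.0.0.5", "READ", "com.company.product1.orders"))   # False: DENY wins
print(is_authorized(acls, "User:UserA", "10.0.0.5", "WRITE", "com.company.product1.orders"))  # False: no ALLOW
```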

To add, remove, or list ACLs, use the Kafka authorizer CLI (bin/kafka-acls.sh).

Example of ACL creation command:

bin/kafka-acls.sh --authorizer kafka.security.authorizer.AclAuthorizer --authorizer-properties zookeeper.connect=localhost:2181 --add --allow-principal User:ModDataImportSystemUser --allow-host '*' --operation Read --operation Write --topic Test-topic

Example of Kafka user and ACL usage in Folio:

To import MARC records, the mod-data-import module needs Read access to Kafka Topic A and Write access to Topic B.

To reach this goal:

- A new Kafka user, ModDataImportSystemUser, should be created, and its credentials should be added to the mod-data-import configuration. The credentials should be stored securely, for instance in a secrets manager (out of the scope of this solution).

- A new ACL should be created for the user ModDataImportSystemUser. The ACL should grant Read access to Topic A and Write access to Topic B.

To access Topics A and B, mod-data-import should use the provided Kafka user credentials. If mod-data-import needs to access other Folio modules, it should use its Folio credentials.
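Assuming Topic A, Topic B, and the module's consumer group are named topic-a, topic-b, and mod-data-import (hypothetical names), the ACLs for this example could be generated with the --consumer and --producer convenience options of kafka-acls.sh, as sketched below in Python. --consumer grants Read and Describe on the topic plus Read on the consumer group, which a consuming module also needs; --producer grants Write, Describe, and Create on the topic. The helper only builds the commands and does not run them.

```python
import shlex


def module_acl_commands(zookeeper: str, kafka_user: str, consume_topic: str,
                        consumer_group: str, produce_topic: str) -> list[list[str]]:
    """Build (but do not run) kafka-acls.sh calls for one module (hypothetical helper)."""
    base = ["bin/kafka-acls.sh",
            "--authorizer-properties", f"zookeeper.connect={zookeeper}",
            "--add", "--allow-principal", f"User:{kafka_user}"]
    return [
        # Read + Describe on the topic, Read on the consumer group.
        base + ["--consumer", "--topic", consume_topic, "--group", consumer_group],
        # Write + Describe + Create on the topic.
        base + ["--producer", "--topic", produce_topic],
    ]


for cmd in module_acl_commands("localhost:2181", "ModDataImportSystemUser",
                               "topic-a", "mod-data-import", "topic-b"):
    print(shlex.join(cmd))
```

If topics are pre-created by the hosting provider, replacing --producer with explicit --operation Write --operation Describe avoids granting Create and stays closer to least privilege.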

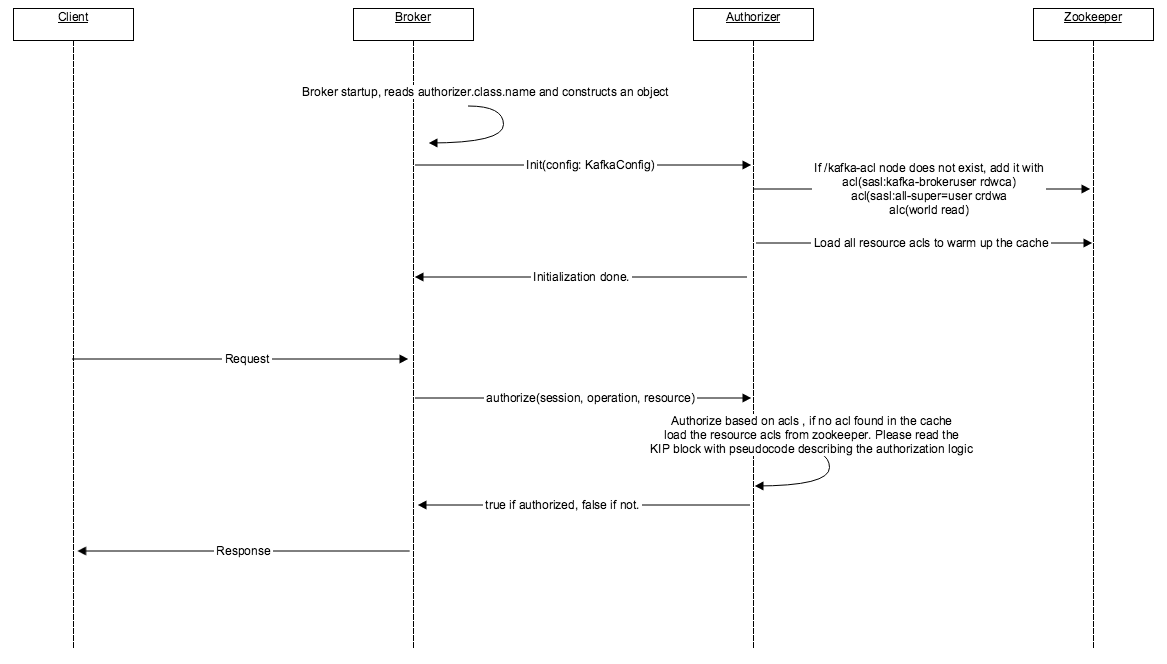

Kafka authorization sequence diagram (for more info, see https://cwiki.apache.org/confluence/display/KAFKA/KIP-11+-+Authorization+Interface#KIP11AuthorizationInterface-DataFlows):

The following section of the Kafka documentation contains more details about ACL creation and authorization configuration: https://kafka.apache.org/documentation/#security_authz.

Data in transit encryption

Apache Kafka allows clients to use TLS (SSL) for encryption of traffic.

SASL/SCRAM-SHA-256 (the preferred Kafka authentication approach for now) should be used only with TLS encryption to prevent interception of SCRAM exchanges. This protects against dictionary or brute-force attacks and against impersonation if ZooKeeper is compromised.

More info about Kafka TLS encryption: https://kafka.apache.org/documentation/#security_ssl

It is the responsibility of hosting providers to create and sign TLS certificates and to update the Folio configuration to use them.
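A sketch of the broker side, expressed as server.properties entries produced by a hypothetical Python helper: a single SASL_SSL listener that uses TLS for encryption and SCRAM-SHA-256 to authenticate both clients and other brokers. Host, port, keystore and truststore paths, and credentials are placeholders that the hosting provider would supply; TLS between brokers and ZooKeeper is configured separately and is not covered here.

```python
def broker_sasl_ssl_settings(host: str, port: int,
                             keystore: str, keystore_password: str,
                             truststore: str, truststore_password: str,
                             broker_user: str, broker_password: str) -> dict[str, str]:
    """server.properties entries for a SASL_SSL listener with SCRAM-SHA-256 (hypothetical helper)."""
    jaas = ("org.apache.kafka.common.security.scram.ScramLoginModule required "
            f'username="{broker_user}" password="{broker_password}";')
    return {
        # TLS encrypts the traffic; SCRAM authenticates the peer.
        "listeners": f"SASL_SSL://{host}:{port}",
        "security.inter.broker.protocol": "SASL_SSL",
        "sasl.enabled.mechanisms": "SCRAM-SHA-256",
        "sasl.mechanism.inter.broker.protocol": "SCRAM-SHA-256",
        # SCRAM login used by this broker on the SASL_SSL listener (including inter-broker traffic).
        "listener.name.sasl_ssl.scram-sha-256.sasl.jaas.config": jaas,
        # Broker certificate and the CA used to verify peers.
        "ssl.keystore.location": keystore,
        "ssl.keystore.password": keystore_password,
        "ssl.truststore.location": truststore,
        "ssl.truststore.password": truststore_password,
    }


settings = broker_sasl_ssl_settings("kafka-0.example.org", 9093,
                                    "/etc/kafka/keystore.jks", "keystore-secret",
                                    "/etc/kafka/truststore.jks", "truststore-secret",
                                    "kafka-broker", "broker-secret")
print("\n".join(f"{key}={value}" for key, value in settings.items()))
```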

Note that there is a performance degradation when TLS is enabled, the magnitude of which depends on the CPU type and the JVM implementation.

This performance degradation can, however, be mitigated by switching to a more powerful CPU type (scaling vertically) or by increasing the number of Kafka brokers (scaling horizontally).

Data at rest encryption

Kafka doesn't provide data at rest encryption out of the box.

It is the responsibility of a hosting provider to select, deploy, and configure a data at rest encryption solution for Kafka.

As a result, data at rest encryption is out of the scope of this solution.

Multi-tenancy

The proposed security solution supports the following levels of data isolation:

- Dedicated Kafka deployment per Tenant

- Dedicated Topic per Tenant and Workload

- Dedicated Kafka topic per Workload. In this case, the messages of all Tenants are put into a shared Kafka topic, and the receiving module separates the messages of one Tenant from those of another by using the Tenant context provided with each message.

For now, the 2nd option is preferred. It assumes the following:

- Each Tenant should have a dedicated set of Topics for all required Workloads. For instance, a Topic for data-import.

- A namespace should be used to distinguish Topics belonging to a Tenant, e.g. <tenant-name>-dataimport.

- We create a dedicated Kafka user for each tenant.

Note: using a dedicated Kafka user for each tenant could become a performance/scalability bottleneck for Kafka consumers.

- We use ACLs to grant each tenant's Kafka user permissions on the corresponding tenant-namespaced topics.

- The module is responsible for managing the Kafka user credentials of each tenant as part of its configuration.
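The assumptions above can be sketched as a small hypothetical Python helper that derives a tenant's namespaced topic names and the kafka-acls.sh calls granting that tenant's Kafka user access to its own topics only. The workload list, the tenant name rule (lowercase letters, digits, and underscores, so that '-' remains an unambiguous separator), the example tenant diku, and the user and group names are all assumptions for illustration.

```python
import re

WORKLOADS = ("dataimport",)  # hypothetical list; only data-import is named in the text


def tenant_topic(tenant: str, workload: str) -> str:
    """Namespaced topic name of the form <tenant-name>-<workload>."""
    # Hypothetical rule: no '-' in tenant names, so the separator stays unambiguous.
    if not re.fullmatch(r"[a-z0-9_]+", tenant):
        raise ValueError(f"unexpected tenant name: {tenant!r}")
    return f"{tenant}-{workload}"


def tenant_acl_commands(zookeeper: str, tenant: str, kafka_user: str,
                        consumer_group: str, workloads=WORKLOADS) -> list[list[str]]:
    """kafka-acls.sh calls tying a tenant's Kafka user to that tenant's topics only."""
    base = ["bin/kafka-acls.sh",
            "--authorizer-properties", f"zookeeper.connect={zookeeper}",
            "--add", "--allow-principal", f"User:{kafka_user}"]
    commands = [
        # Literal (exact-name) pattern: one ACL set per tenant topic.
        base + ["--operation", "Read", "--operation", "Write",
                "--operation", "Describe", "--topic", tenant_topic(tenant, w)]
        for w in workloads
    ]
    # Consumers also need Read on their consumer group.
    commands.append(base + ["--operation", "Read", "--group", consumer_group])
    return commands


for cmd in tenant_acl_commands("localhost:2181", "diku", "diku-kafka-user",
                               "diku-mod-data-import"):
    print(" ".join(cmd))
```

Literal ACLs keep every grant explicit. A single prefixed ACL on <tenant-name>- would also work, which is safe only as long as the separator cannot occur in tenant names.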

The Kafka multi-tenancy approach will be described in more detail here: /wiki/spaces/~Vasily/pages/3571890. The selected option may change over time as more details become known (see the Note for point 3).

Responsibilities

A hosting provider is responsible for:

- Configuration of Kafka to use SASL/SCRAM-SHA-256 for authentication

- Configuration of Kafka to use kafka.security.authorizer.AclAuthorizer for Authorization

- Creation of Kafka users

- Creation of ACLs by using provided ACL templates and assigning them to the Kafka users

- Addition of the created Kafka users' credentials to the appropriate module configurations

- Configuration of ZooKeeper to use SASL/DIGEST-MD5 for authentication

- Configuration of ZooKeeper authorization

- Update of the Kafka configuration so that Kafka can pass ZooKeeper authentication and authorization

- Configuration of Kafka and ZooKeeper TLS (SSL) encryption of data in transit

- Issuing and providing certificates for Kafka and ZooKeeper TLS (SSL) encryption

- Selection, deployment, and configuration of a data at rest encryption solution for Kafka

The Folio community is responsible for:

- Creation of a PoC for the described solution

- Update of this guide and responsibilities after PoC implementation, if required

- Creation of ACL templates for Folio modules. Each template should define the permissions the module has. The template shouldn't contain information specific to hosting providers (e.g. host, IP address, etc.).

- Provision of other templates, guides, and scripts that could ease hosting provider responsibilities
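One possible shape for the ACL templates mentioned above, sketched in Python: the template lists only operations, resource types, and resource names with a ${tenant} placeholder, and the hosting provider supplies the principal, tenant, and ZooKeeper address when rendering it into kafka-acls.sh calls. The template format, topic and group names, and helper name are hypothetical; the entries mirror the mod-data-import example (Read on Topic A, Write on Topic B) plus the consumer group a consuming module needs.

```python
import shlex
from string import Template

# Hypothetical template for mod-data-import: permissions only, no hosts or IP addresses.
MOD_DATA_IMPORT_ACL_TEMPLATE = [
    {"operation": "Read", "resource": "topic", "name": "${tenant}-topic-a"},
    {"operation": "Write", "resource": "topic", "name": "${tenant}-topic-b"},
    {"operation": "Read", "resource": "group", "name": "${tenant}-mod-data-import"},
]


def render_acl_template(template: list[dict[str, str]], principal: str,
                        tenant: str, zookeeper: str) -> list[list[str]]:
    """Turn template entries into kafka-acls.sh argument lists for one installation."""
    commands = []
    for entry in template:
        # Template.substitute raises KeyError if a placeholder is left unfilled.
        name = Template(entry["name"]).substitute(tenant=tenant)
        commands.append([
            "bin/kafka-acls.sh",
            "--authorizer-properties", f"zookeeper.connect={zookeeper}",
            "--add", "--allow-principal", f"User:{principal}",
            "--operation", entry["operation"],
            f"--{entry['resource']}", name,   # --topic or --group
        ])
    return commands


for cmd in render_acl_template(MOD_DATA_IMPORT_ACL_TEMPLATE, "ModDataImportSystemUser",
                               "diku", "localhost:2181"):
    print(shlex.join(cmd))
```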