How to write JMeter performance tests

Step-by-step guide

Download JMeter and unpack the zip/tar archive. Run jmeter.bat (Windows) or jmeter (Unix) from the bin directory.

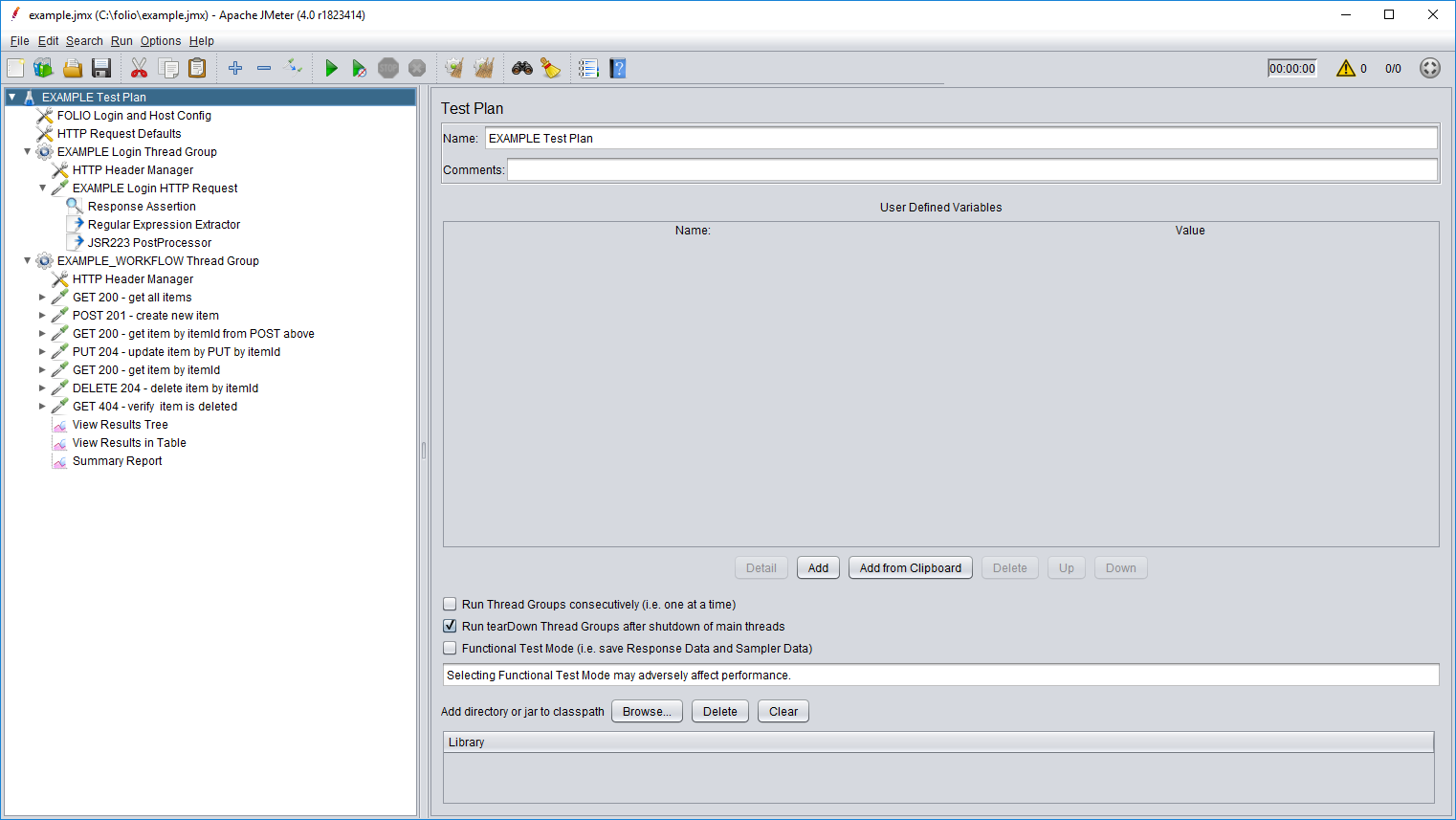

Open the example.jmx file in the JMeter GUI and replace "EXAMPLE" with the actual Test Plan name.

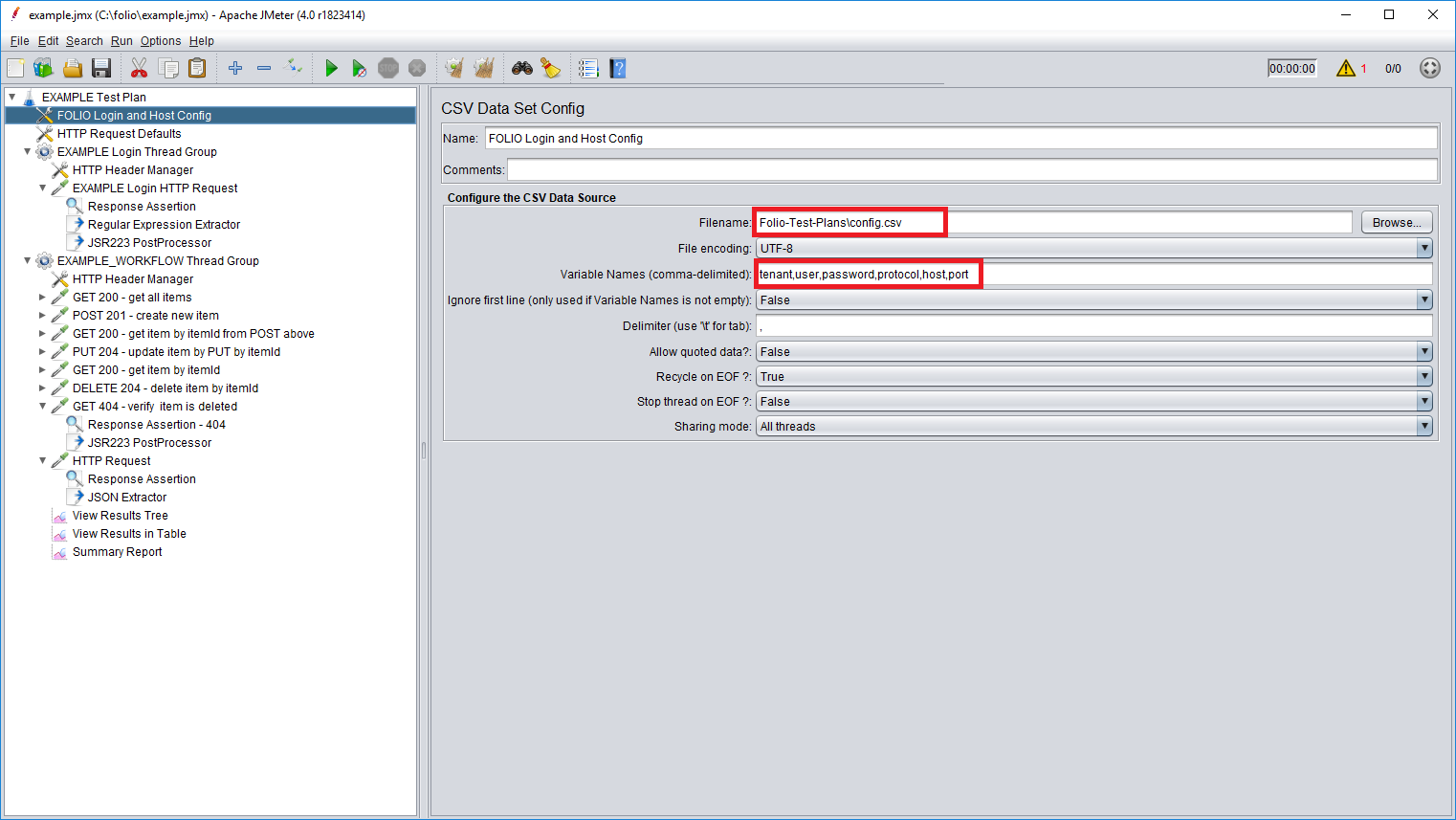

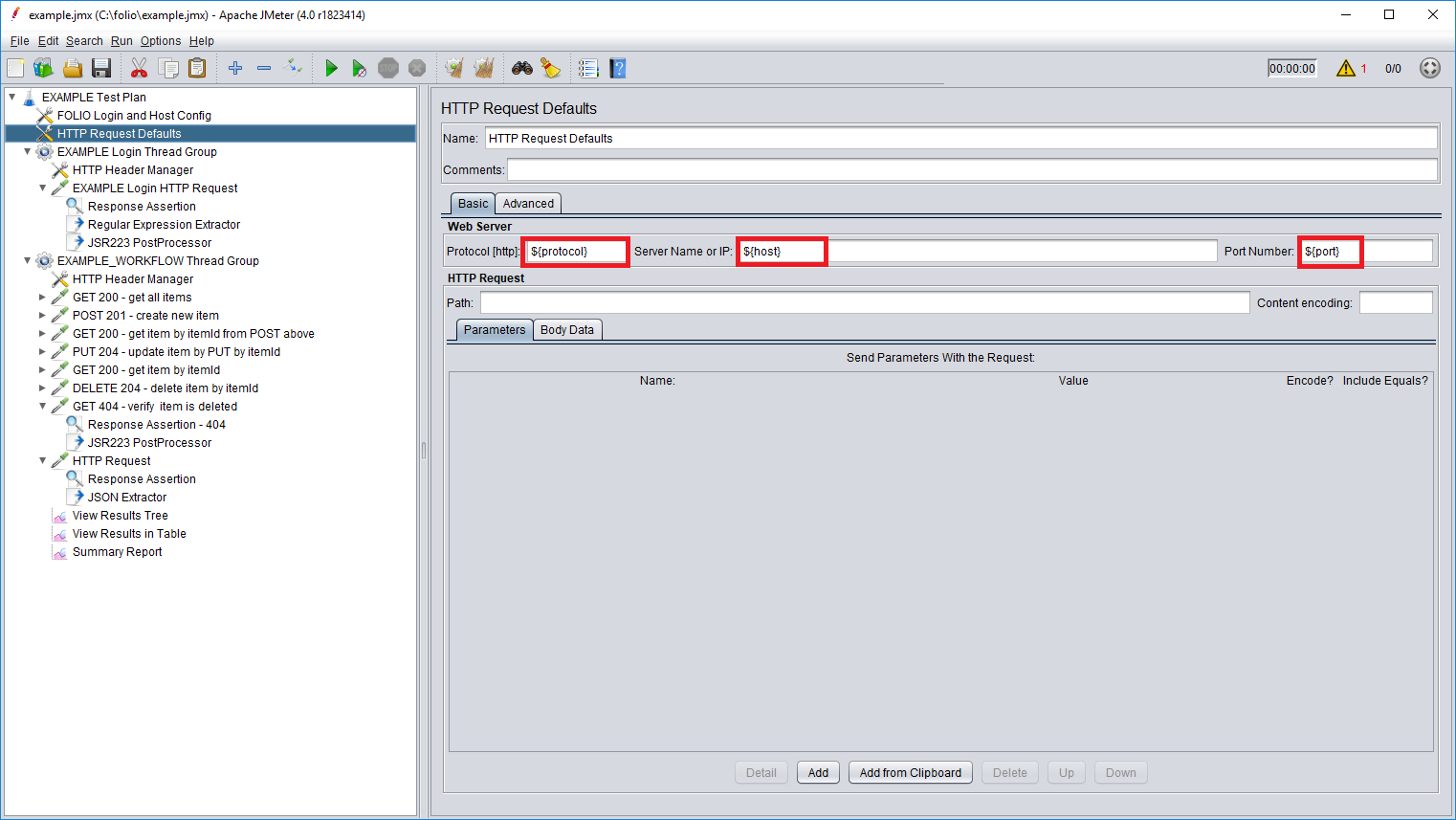

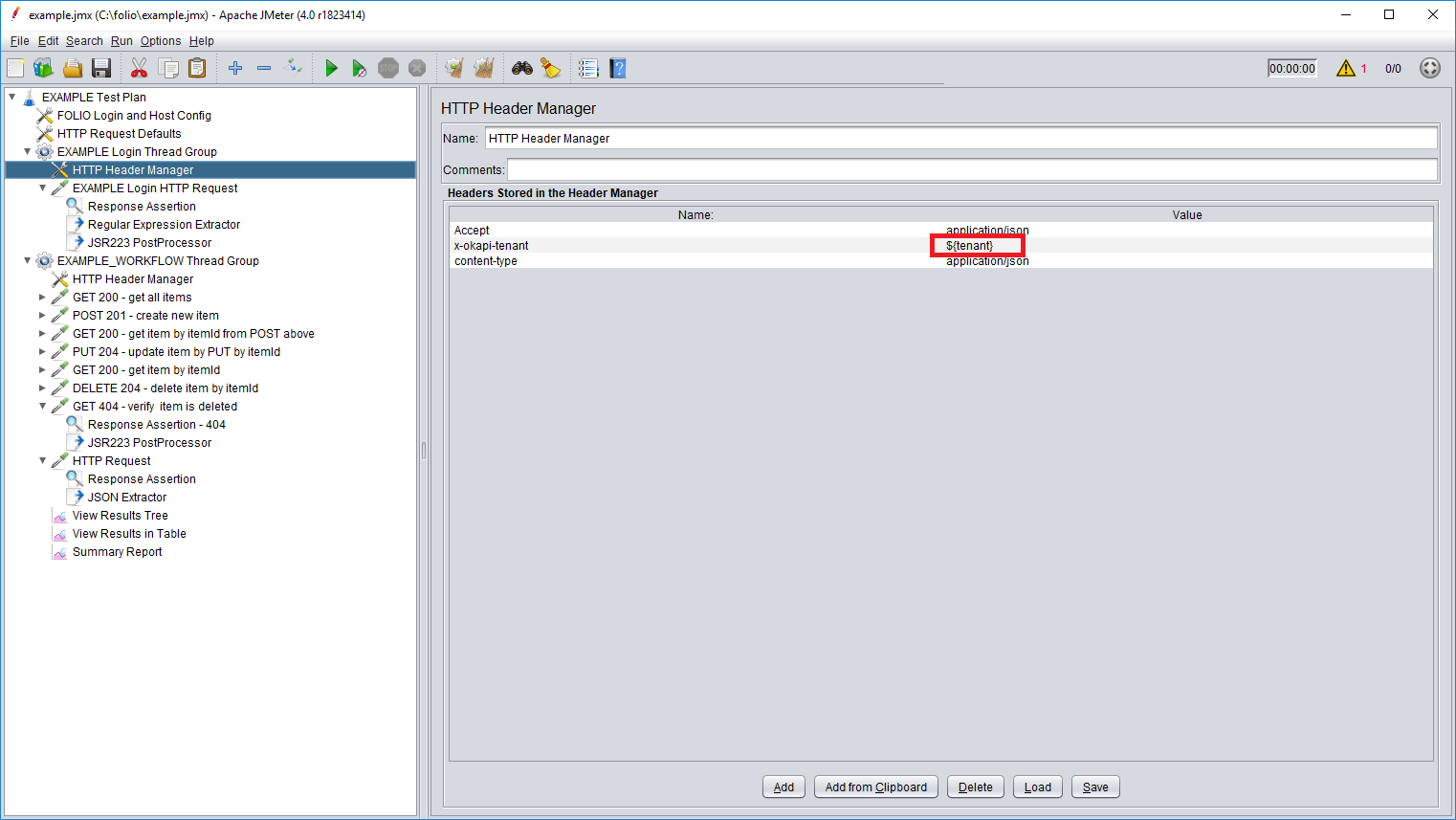

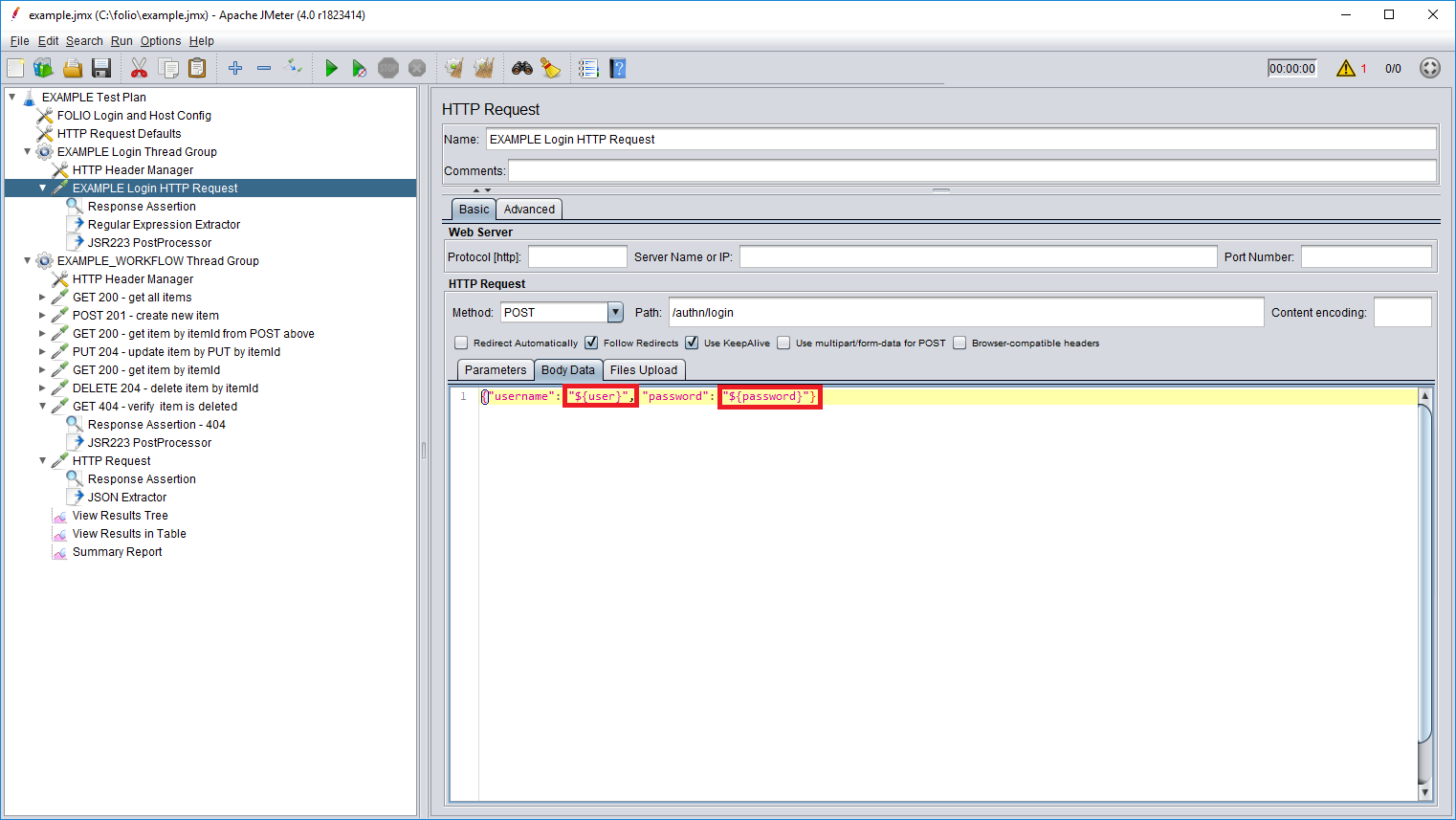

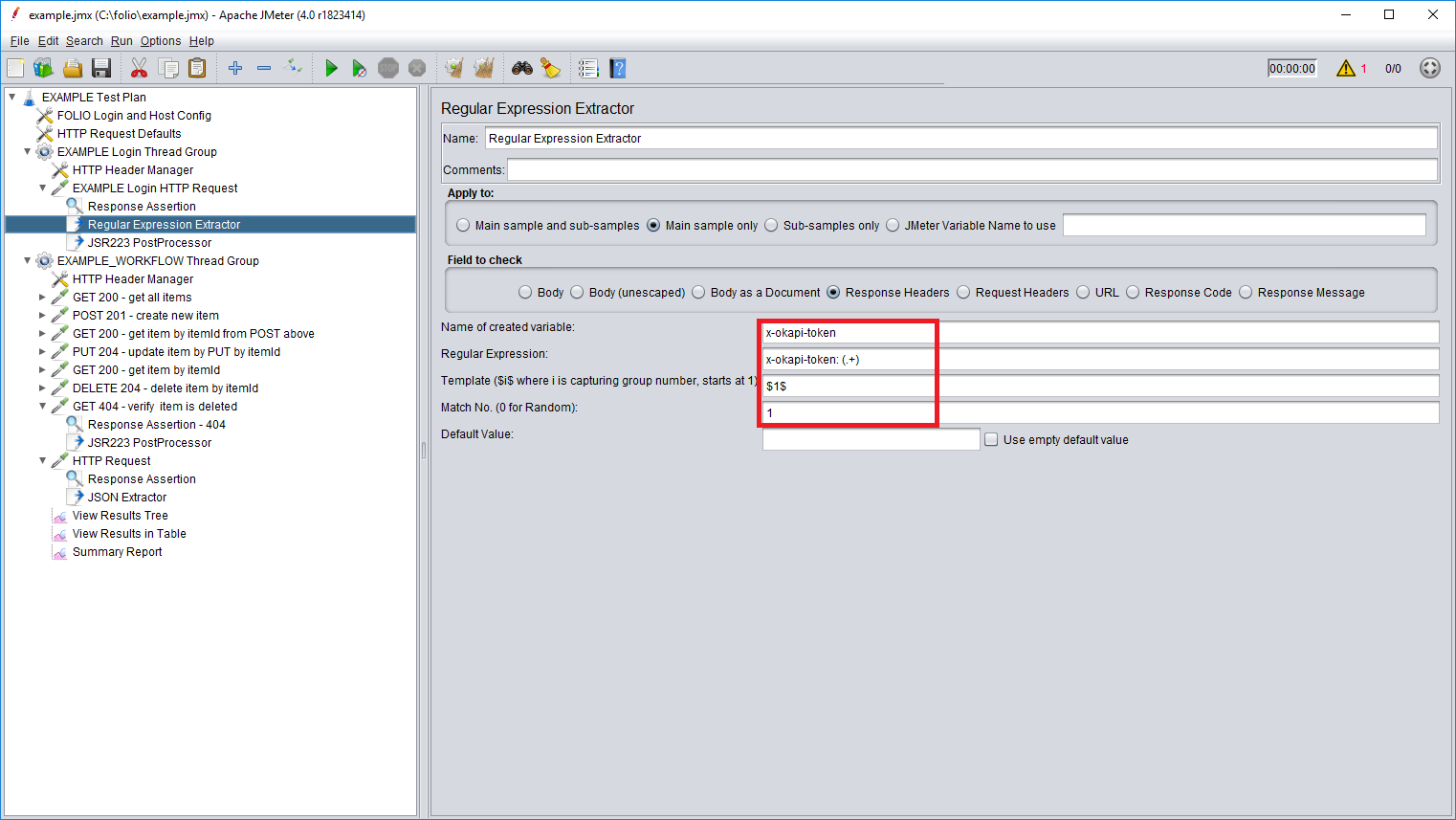

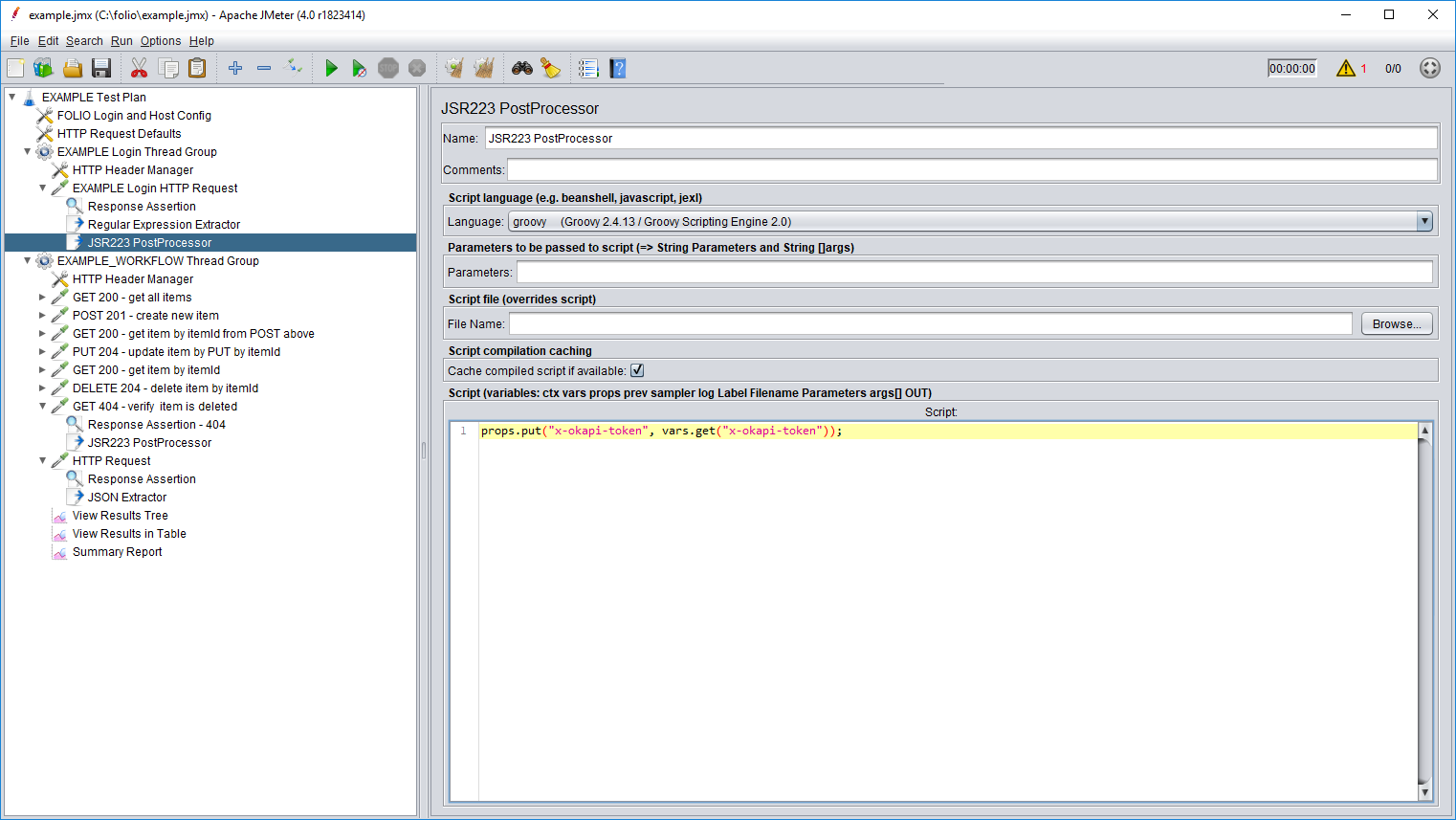

The Example Test Plan contains the FOLIO Login and Host Configs, which specify the variable names and the path to the file where the actual values are located. Those variables are referenced from the HTTP Request Defaults and the Login Thread Group.

The EXAMPLE Login Thread Group contains an EXAMPLE Login HTTP Request and an HTTP Header Manager. The EXAMPLE Login HTTP Request describes the request sent to obtain an authentication token: a POST request is sent to the /auth/login endpoint with the username and password specified in the Body Data. A Response Assertion verifies that a 201 code is received in the response. A Regular Expression Extractor extracts the x-okapi-token, and a JSR223 PostProcessor stores that token in the properties so that it can be used in other requests.
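The login flow described above (assert the status code, extract the token, store it for later requests) can be sketched outside JMeter as follows. This is only an illustration of the logic, not the contents of example.jmx: the `props` dictionary stands in for JMeter's shared properties, and the regular expression, function name, and sample header text are assumptions made for the example.

```python
import re

# Stand-in for JMeter's shared properties (illustrative only).
props = {}


def process_login_response(status_code, response_headers):
    """Mimic the assertion, extractor, and post-processor of the login request."""
    # Response Assertion: the login request is expected to return 201.
    if status_code != 201:
        raise AssertionError(f"expected 201, got {status_code}")

    # Regular Expression Extractor: pull the token out of the raw headers.
    # The pattern is an assumption, not the one used in example.jmx.
    match = re.search(
        r"^x-okapi-token:\s*(\S+)",
        response_headers,
        re.IGNORECASE | re.MULTILINE,
    )
    if match is None:
        raise ValueError("x-okapi-token header not found")

    # Post-processor: store the token where other requests can read it.
    props["x-okapi-token"] = match.group(1)
    return props["x-okapi-token"]


# Hypothetical response data, for illustration only.
sample_headers = "Content-Type: application/json\nx-okapi-token: abc.def.ghi\n"
token = process_login_response(201, sample_headers)
```

Storing the token in a shared place rather than a per-thread variable is what lets requests in a different thread group reuse it.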

The EXAMPLE_WORKFLOW Thread Group contains an HTTP Header Manager and several example HTTP requests that describe an example workflow. For simplicity, the requests use placeholder "item" values and example endpoints. Make sure to replace those "items" with actual endpoints and values, or delete the example requests and create the necessary ones from scratch as shown in steps 3-9.

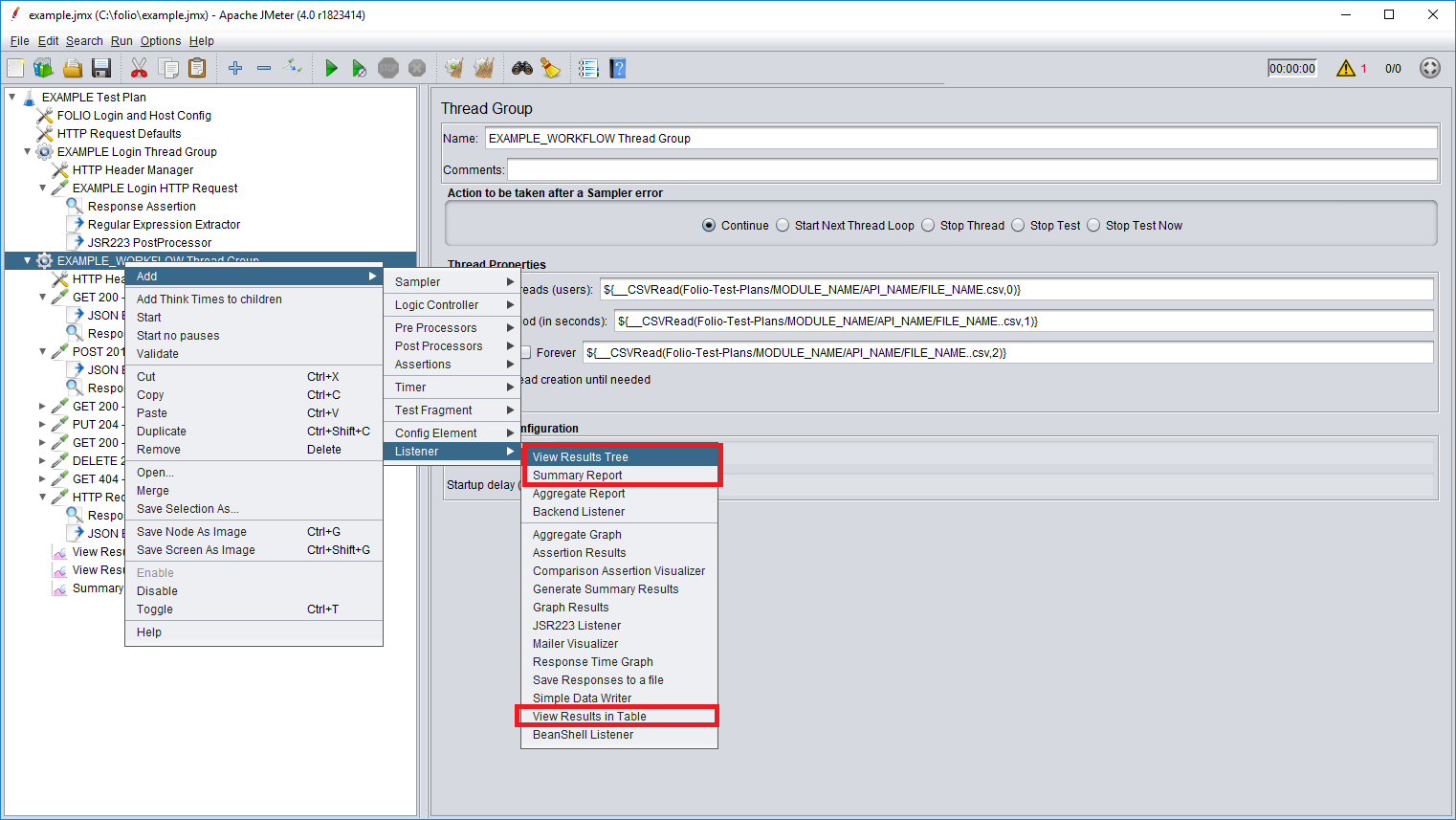

View Results Tree, View Results in Table and Summary Report are Listeners that aggregate information that JMeter collects from requests and responses.

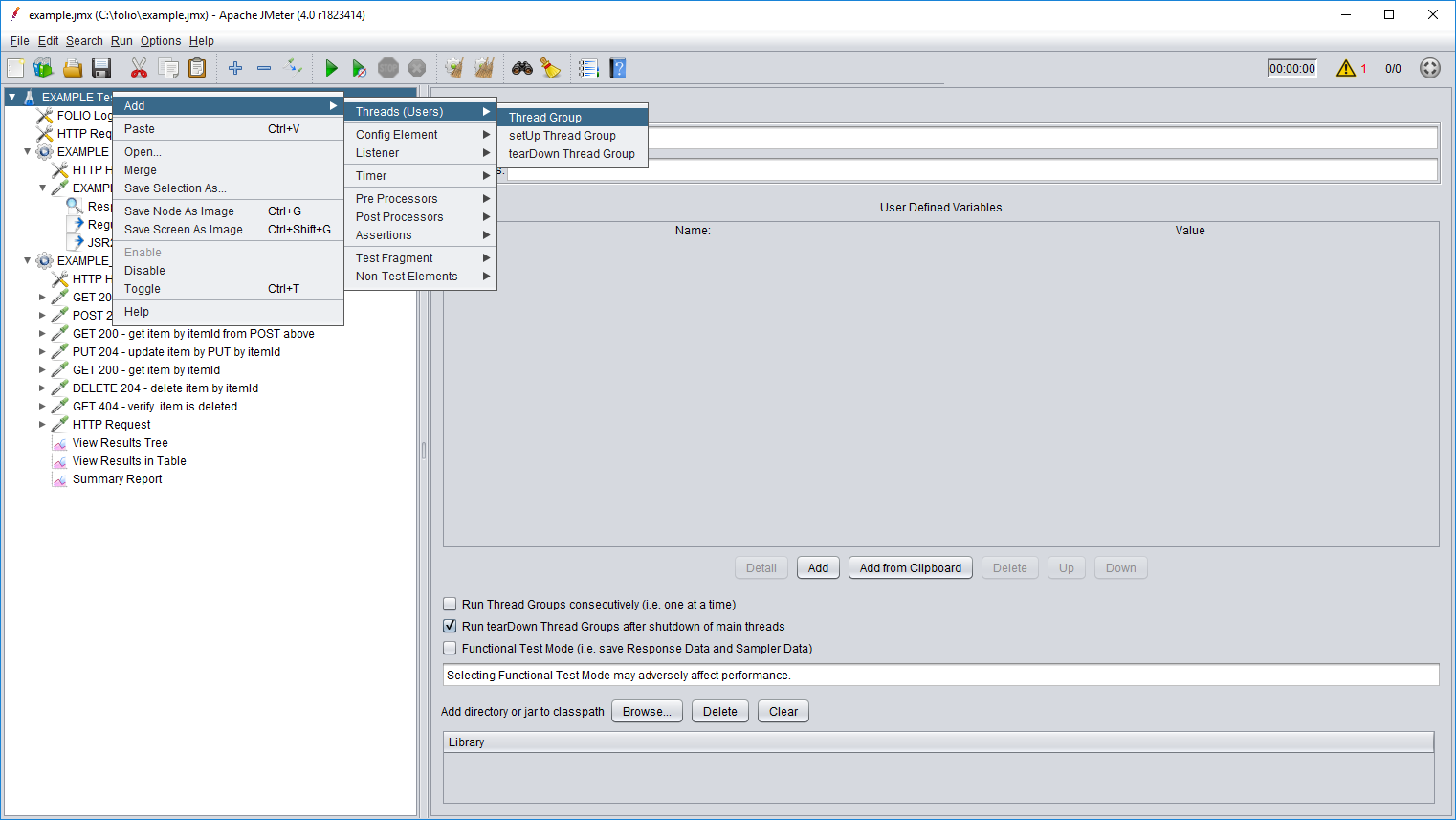

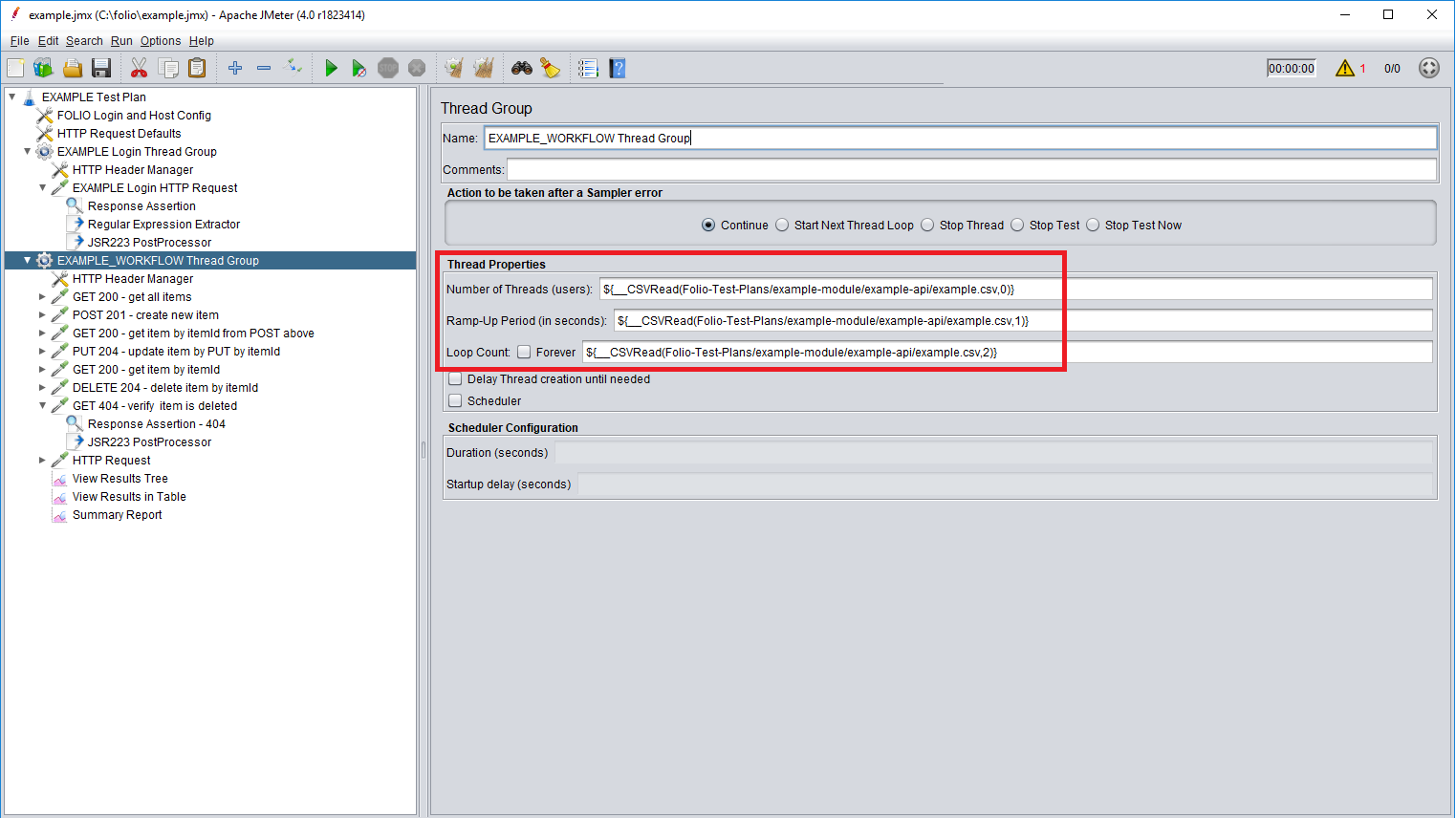

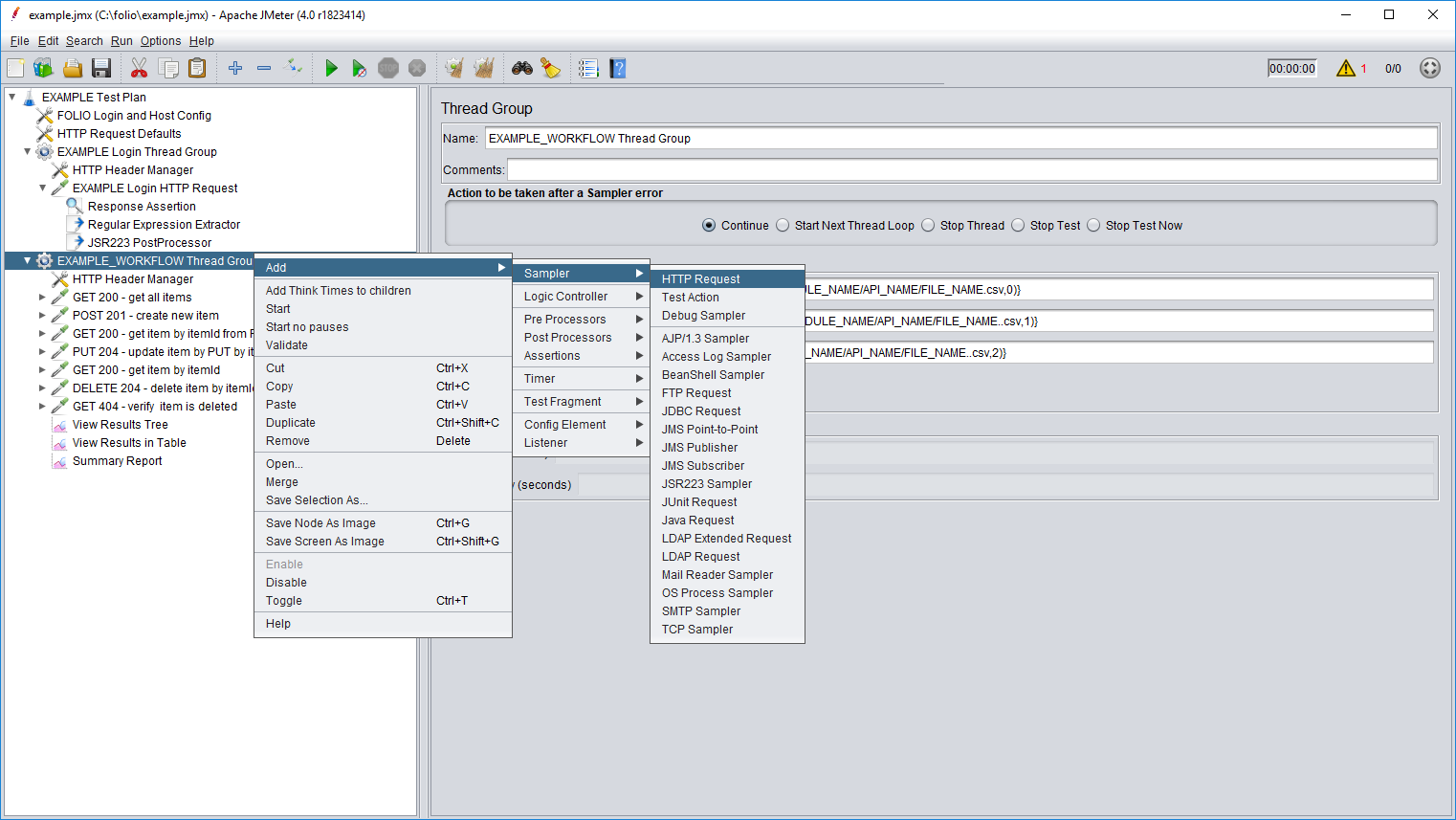
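To give a rough idea of the kind of aggregation a listener such as Summary Report performs, the sketch below computes a few per-request statistics from a list of samples. It is a simplified illustration, not JMeter's implementation: the `summarize` function, the tuple layout, and the sample values are all made up for the example.

```python
from statistics import mean


def summarize(samples):
    """Aggregate (elapsed_ms, success) tuples collected for one request label."""
    elapsed = [ms for ms, _ in samples]
    failures = sum(1 for _, ok in samples if not ok)
    return {
        "samples": len(samples),
        "average_ms": mean(elapsed),
        "min_ms": min(elapsed),
        "max_ms": max(elapsed),
        "error_pct": 100.0 * failures / len(samples),
    }


# Hypothetical samples: three successful requests and one failure.
report = summarize([(120, True), (80, True), (200, False), (100, True)])
```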

- Add a new thread group as shown below, or modify the existing EXAMPLE_WORKFLOW by specifying the name of the workflow and the actual thread properties. The number of threads, ramp-up time and loop count can be stored in a separate .csv file that should be placed in the same directory as the test script (see step 10). Values in the .csv file should be separated by commas. For example, the .csv file content "100,5,1" corresponds to 100 threads, 5 seconds of ramp-up time and 1 loop. In the JMeter GUI, specify the path to the .csv file and the index of the value in the file, as shown in the image below.

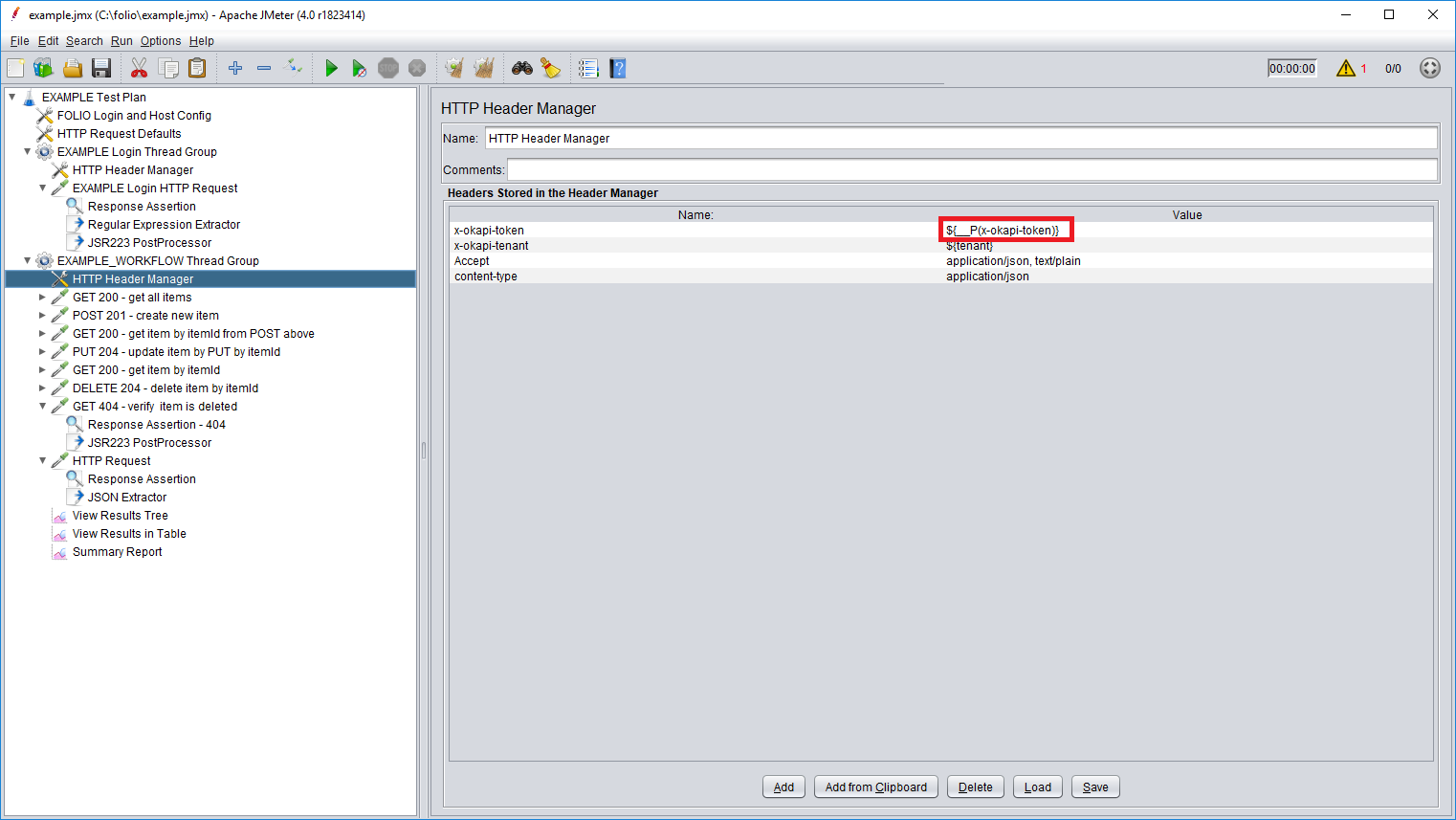

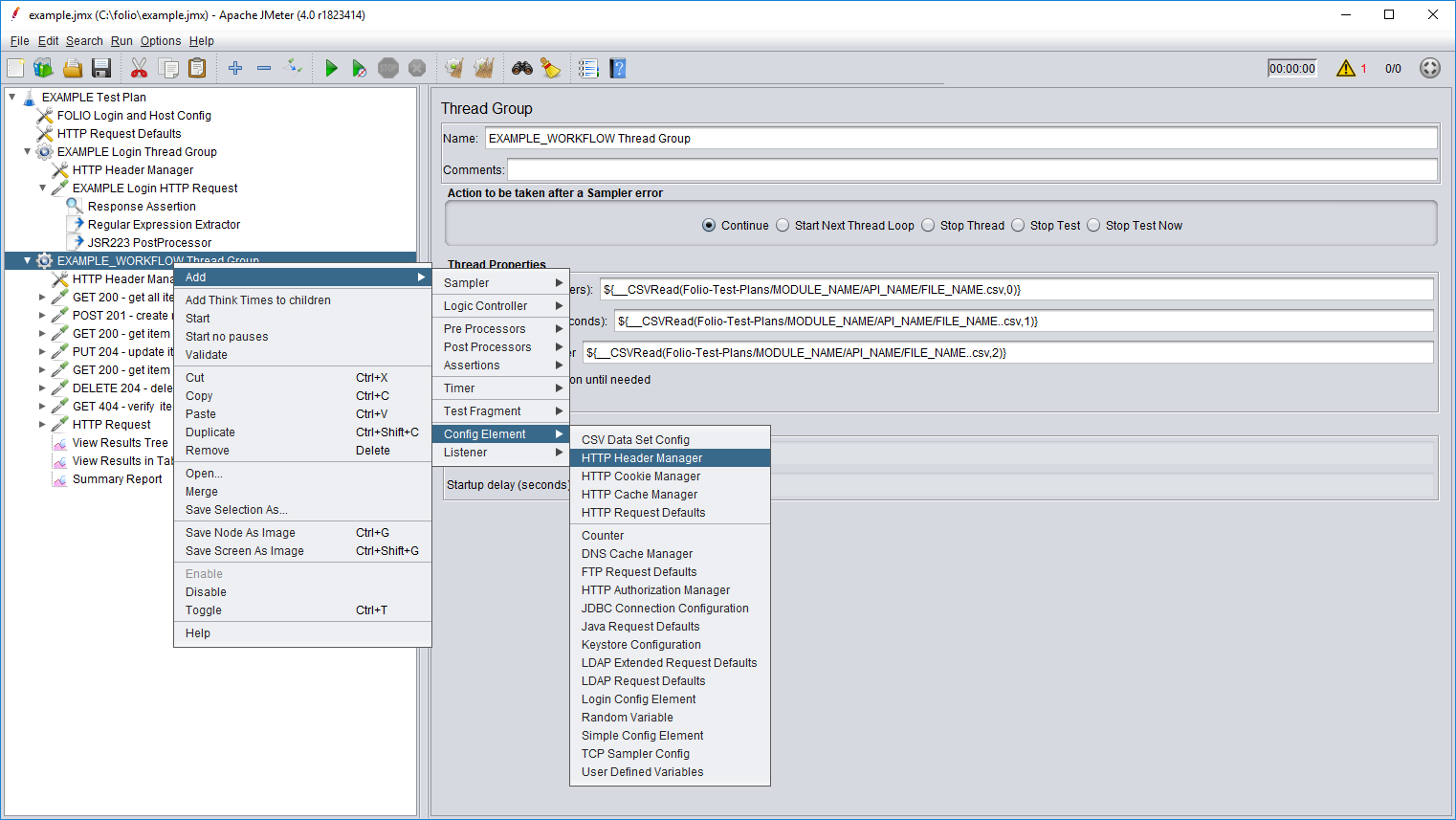

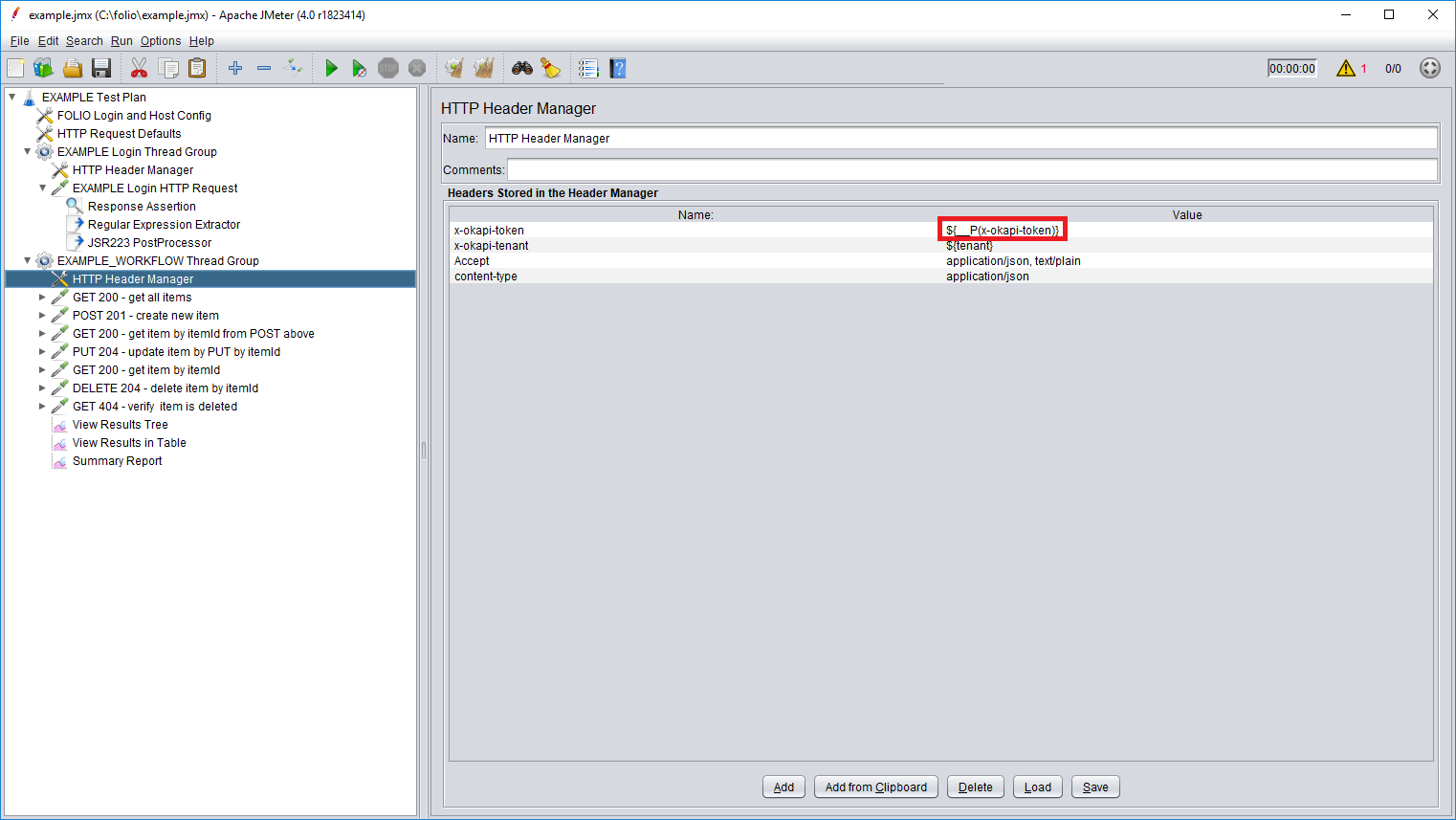
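The mapping from the .csv content to thread properties can be sketched like this. The function name and dictionary keys are hypothetical, and the sketch assumes the values appear in the order threads, ramp-up, loops, with positions counted from 0; check the index convention against the fields in the JMeter GUI.

```python
import csv
import io


def read_thread_settings(csv_text):
    """Parse one comma-separated line of thread properties."""
    row = next(csv.reader(io.StringIO(csv_text)))
    # Assumed positions: 0 = threads, 1 = ramp-up seconds, 2 = loop count.
    threads, ramp_up_seconds, loops = (int(value.strip()) for value in row[:3])
    return {
        "threads": threads,
        "ramp_up_seconds": ramp_up_seconds,
        "loops": loops,
    }


# The example content from the step above.
settings = read_thread_settings("100,5,1\n")
```

Keeping these values in a file means the load profile can be changed without editing the .jmx script itself.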

- Add an HTTP Header Manager to specify the required headers for every request:

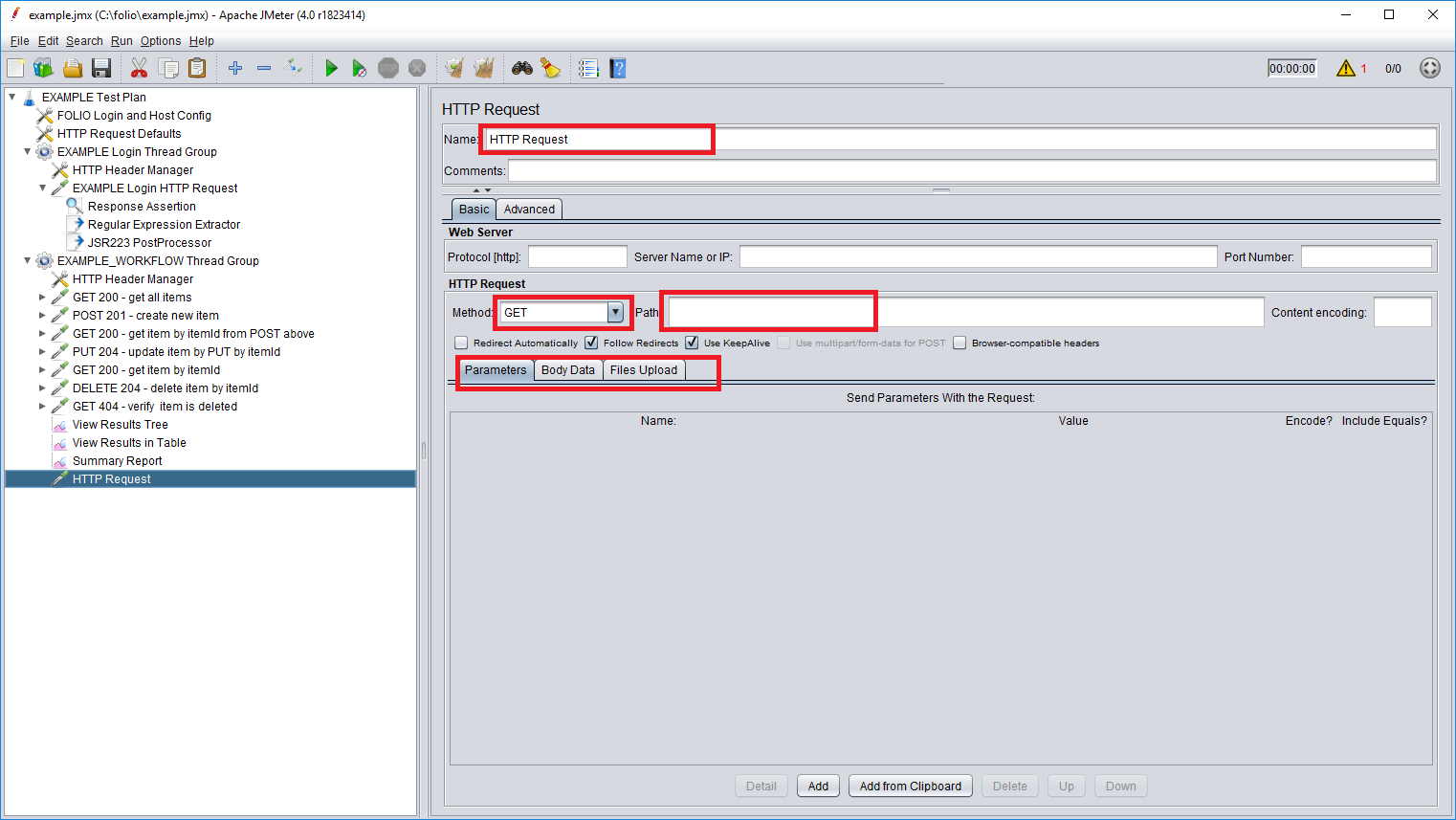

For example, the x-okapi-token obtained in the previous login request.

- Create a new HTTP request and specify its name, method, endpoint, and any other necessary parameters and body data.

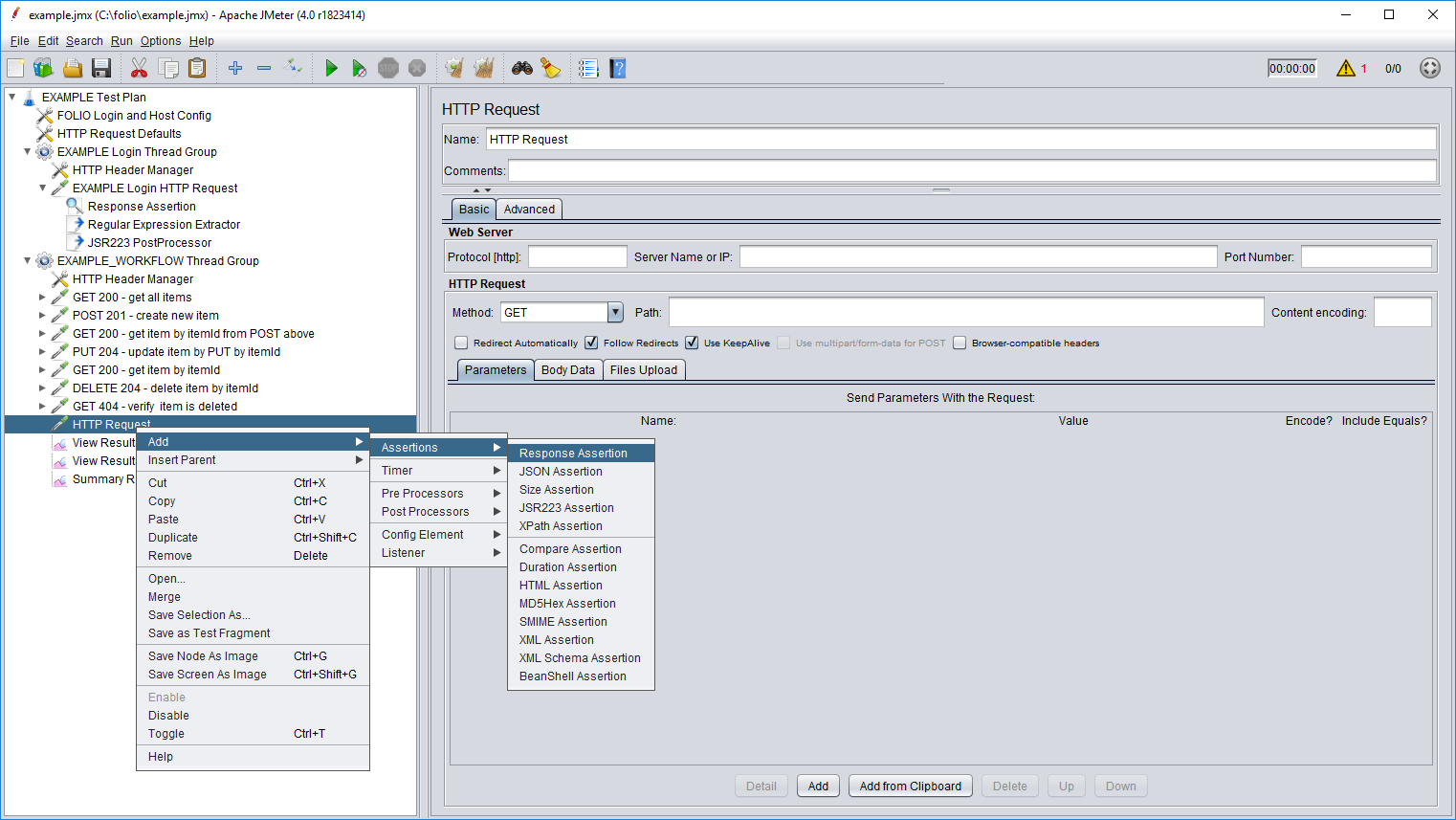

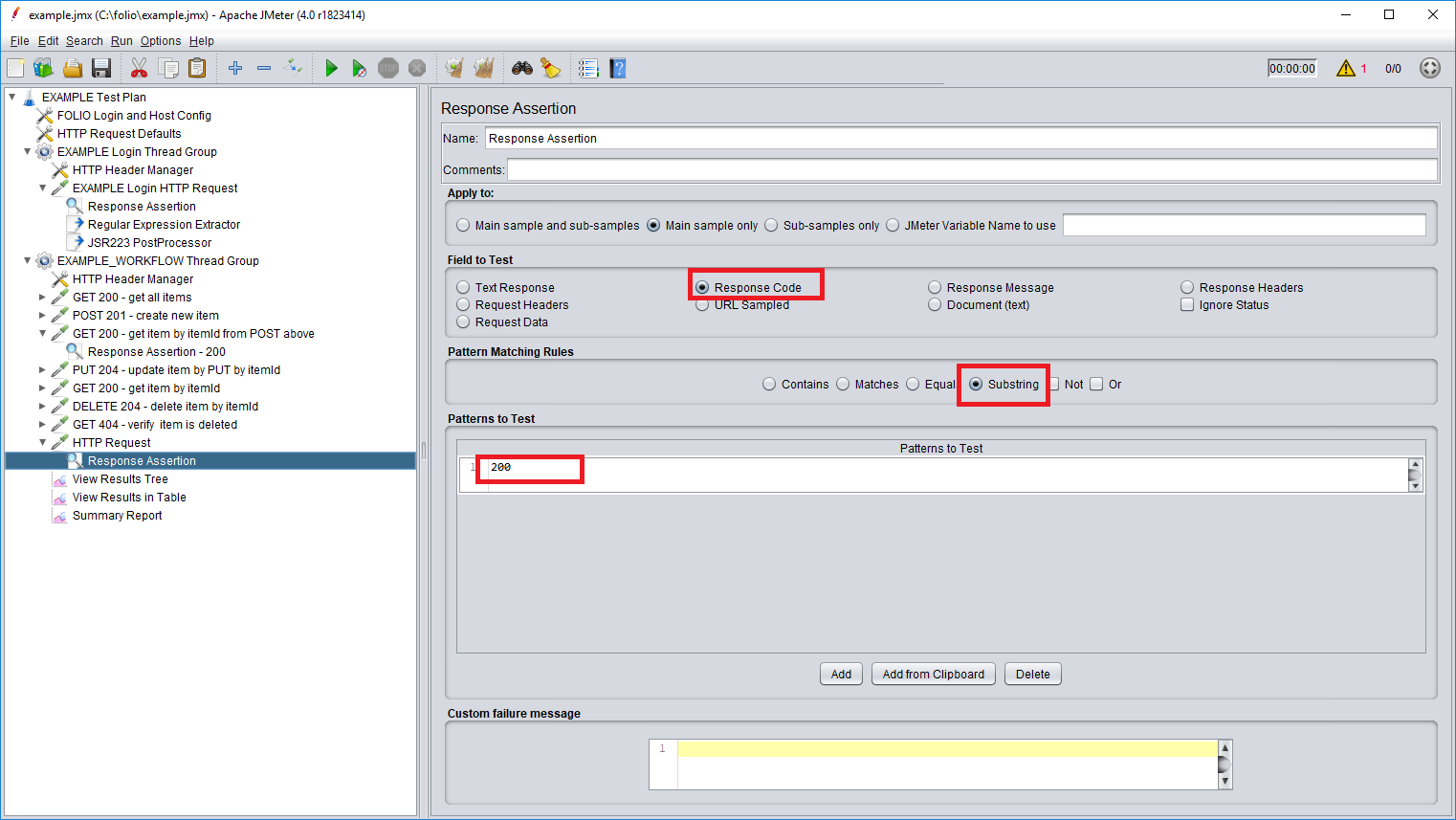

Notice that the Web Server configs have already been specified in the HTTP Request Defaults in the EXAMPLE Test Plan.

- Add a Response Assertion, for example a simple response code assertion:

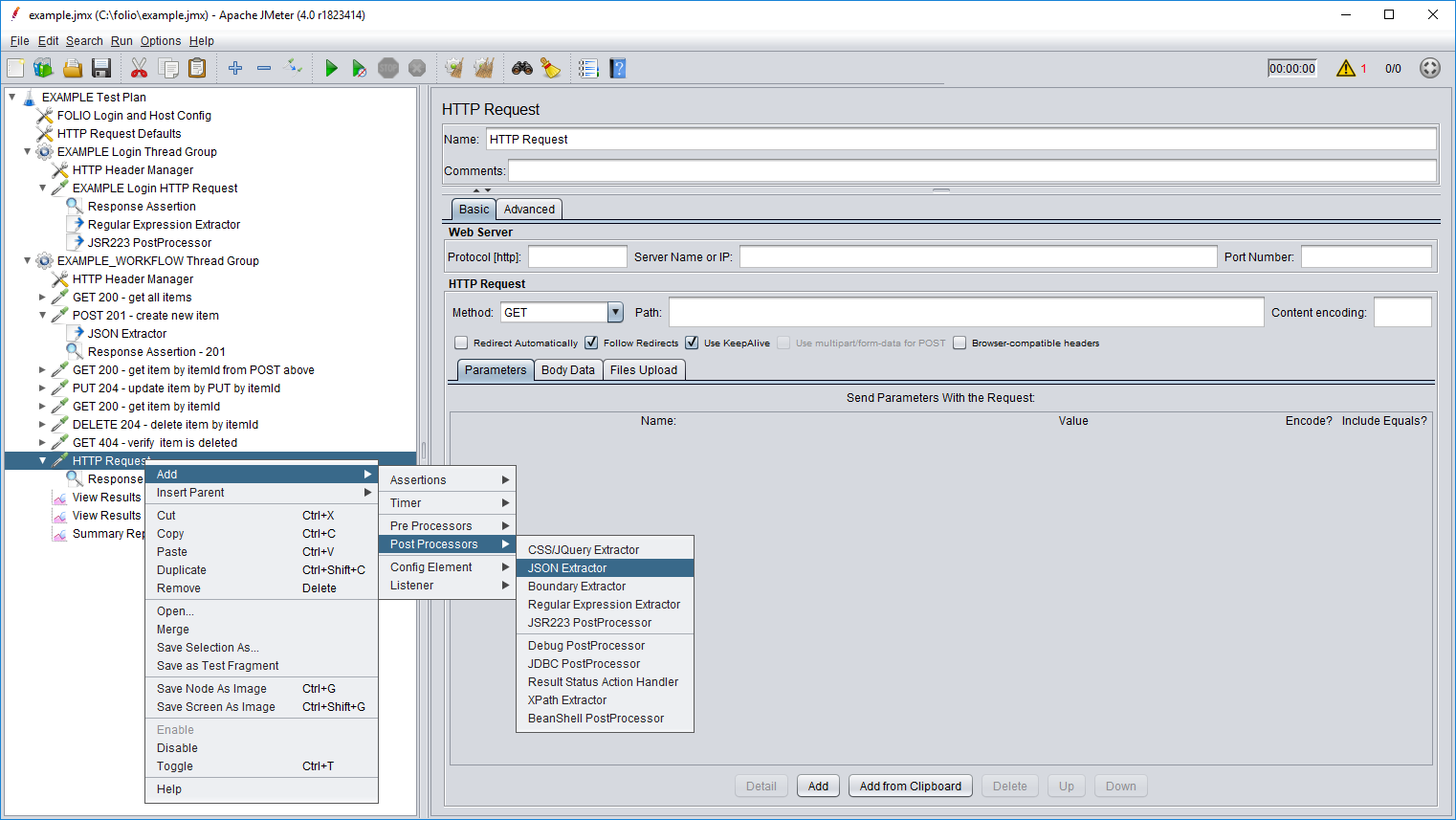

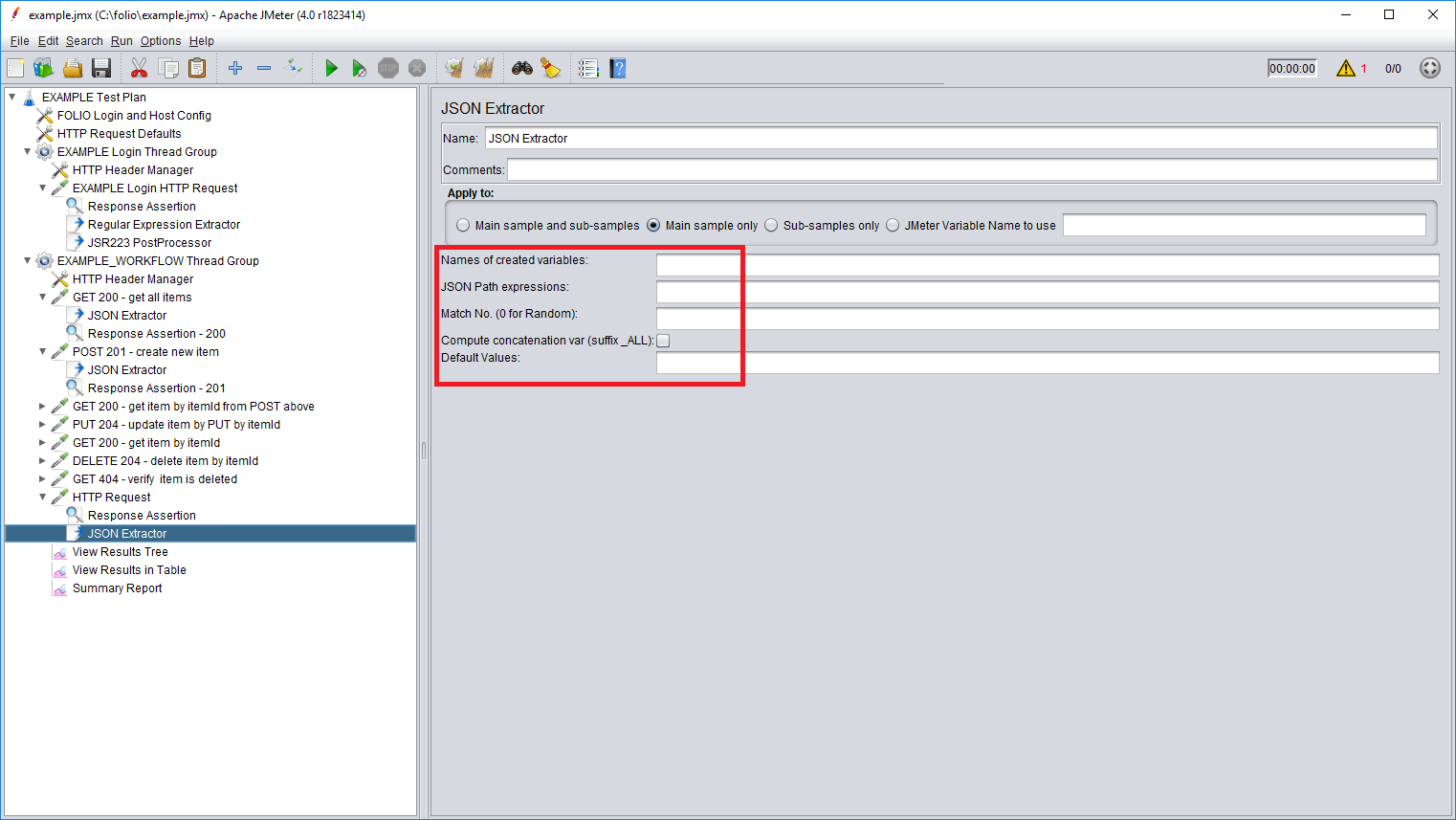

- If data is expected in the response, it can be extracted with a JSON Extractor. Provide the names of the variables that will store the extracted values, together with the JSON path expressions that select them. See the requests in the EXAMPLE_WORKFLOW for more examples of how to configure a JSON Extractor. For more information on how to write JSON path expressions, visit the link.

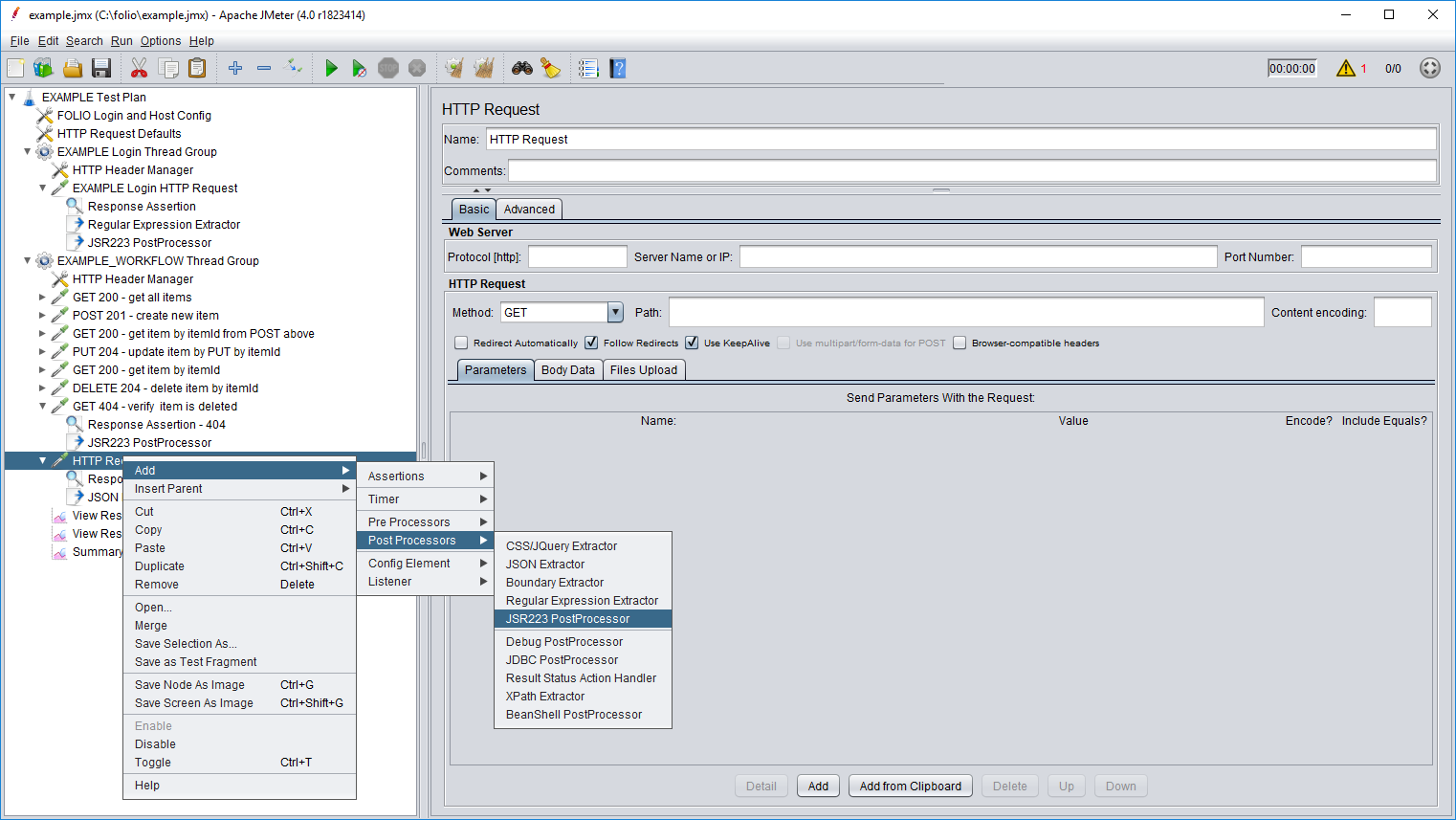

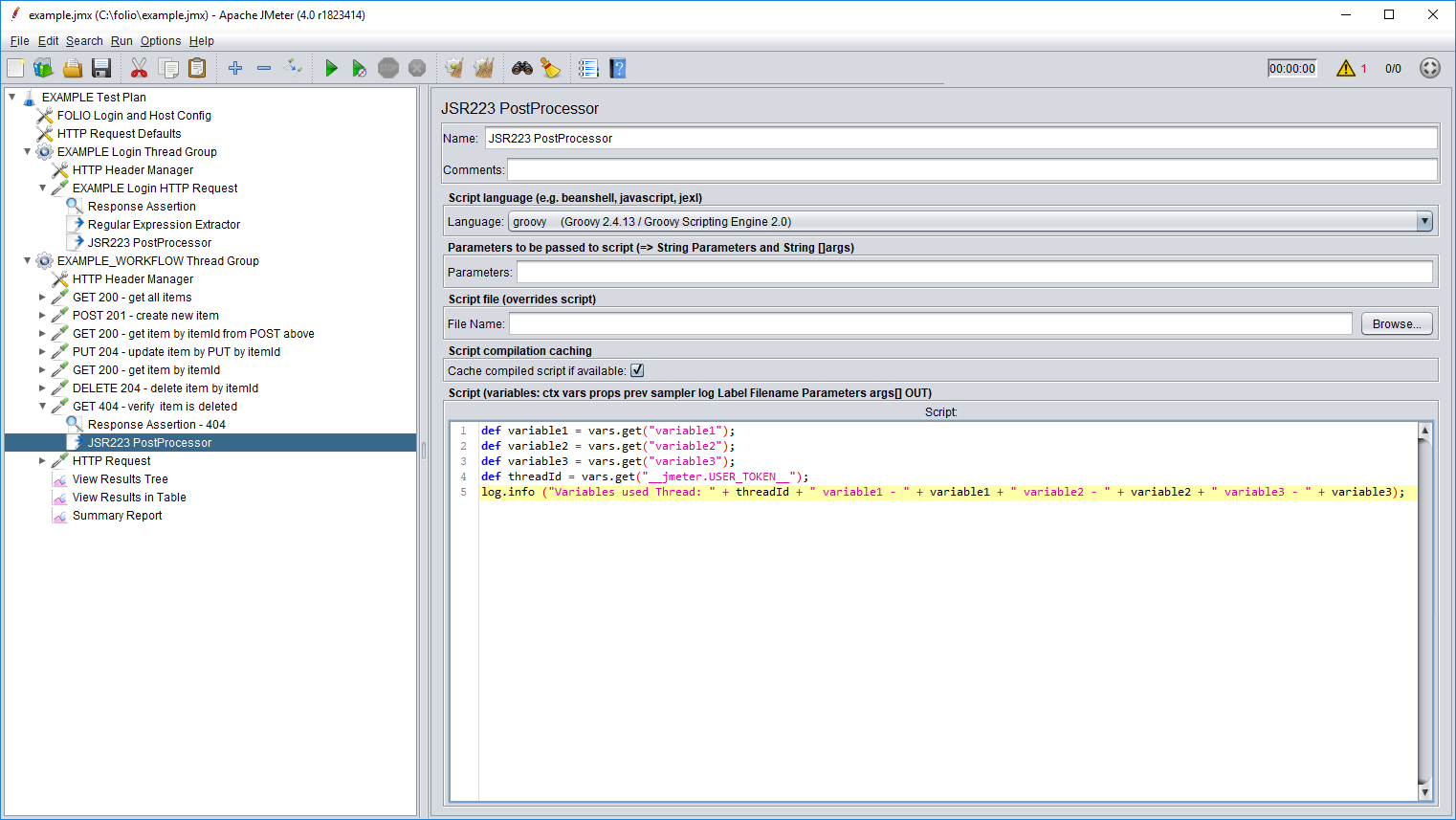
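As an illustration of what a JSON path expression selects, the sketch below resolves simple paths against a response body and stores the results under variable names. It handles only `.name` and `[index]` steps, not the full JSON path syntax that the JSON Extractor supports, and the response body, variable names, and `extract` helper are hypothetical.

```python
import json
import re


def extract(body, path):
    """Resolve a simple JSON path such as $.items[0].id against a JSON string."""
    value = json.loads(body)
    # Each step is either .name or [index]; filters and wildcards are not handled.
    for name, index in re.findall(r"\.(\w+)|\[(\d+)\]", path):
        value = value[name] if name else value[int(index)]
    return value


# Hypothetical response body, for illustration only.
body = '{"items": [{"id": "item-1", "status": {"name": "Available"}}], "totalRecords": 1}'

# Variable names paired with the expressions that fill them.
variables = {
    "itemId": extract(body, "$.items[0].id"),
    "itemStatus": extract(body, "$.items[0].status.name"),
    "total": extract(body, "$.totalRecords"),
}
```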

- To store variables in the properties or add logging when the workflow execution finishes, other post-processors can be used, such as the JSR223 PostProcessor.

- To aggregate the information JMeter collects from requests and responses, add listeners, for example:

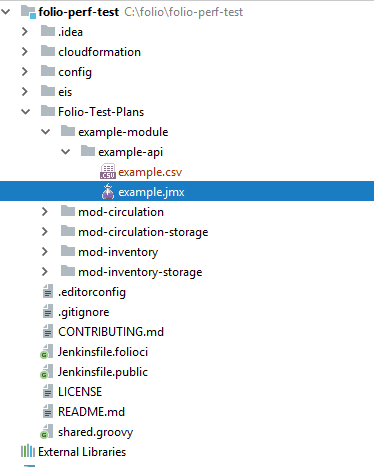

Save the changes and place the .jmx file, along with the .csv file, in the directory that corresponds to the API under test, inside the module's folder under Folio-Test-Plans in the folio-perf-test project. Commit and push the changes.

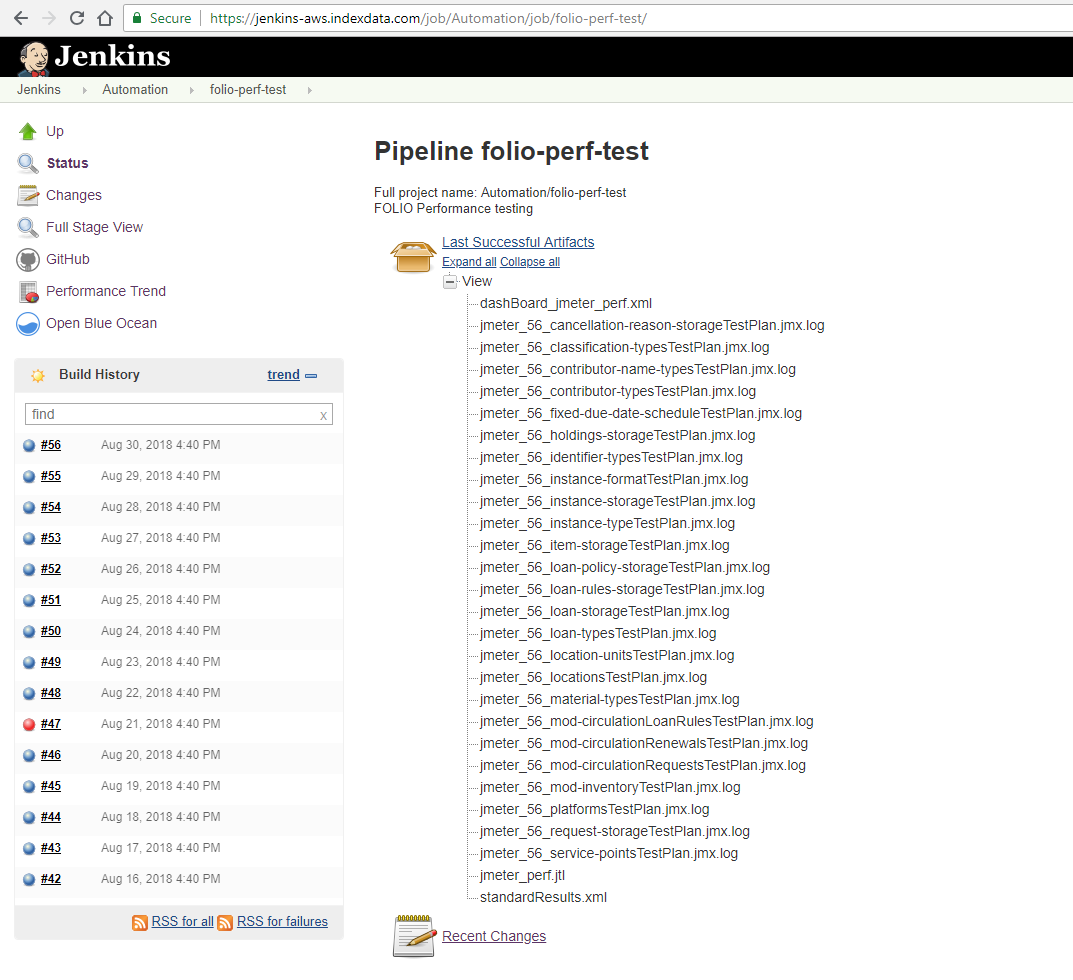

Once the pull request is merged, the new version of the folio-perf-test project will be checked out by the Jenkins job that runs nightly. After the build is complete, you can verify that your test scripts ran along with the others by checking the logs: