MODQM-217 Spike: Determine a plan to address persistent 500 errors upon editing a quickMARC record


Objectives

- what code change(s) may be a contributing factor

- how can we prevent 500 errors

- Kafka

- what additional Karate tests are needed

- how can we anticipate these issues rather than have customers identify them

- if the issue is related to multi-tenant versus single tenant

- if the issue is related to how the data is loaded (via data import or directly to storage)

- if the issue is related to how the data is updated (via data import, inventory, or MARC authority app UI or directly to storage)

Analysis

500 error causes

| Issue | Cause | Description |
|---|---|---|
| Kafka topics configuration | Kafka consumers try to join existing consumer groups that use different assignment strategies. As a result, mod-quick-marc receives no confirmation of a successful or failed update. mod-quick-marc waits for the confirmation with a timeout of 30 s; if the timeout is exceeded, it responds with a 500 error. | |
| Related modules are down | The update process is started in SRM by sending a Kafka event to SRS and then to Inventory. If SRS or Inventory is down, the process cannot finish. Consequently, the confirmation timeout in mod-quick-marc is exceeded. | |
| Kafka topic does not exist | Kafka consumers cannot connect and receive messages because the topics do not exist. Consequently, the confirmation timeout in mod-quick-marc is exceeded. | |
| IDs of records are not consistent | When an SRS record is first created, it has two IDs, the record ID and the record source ID, and they are the same. After the first update of the record, the record source ID changes. mod-quick-marc expects to receive an event containing an ID equal to the record ID but receives a different ID, because the initial ID was changed. Consequently, the confirmation timeout in mod-quick-marc is exceeded. | |
| Optimistic locking response changed | When mod-quick-marc receives an optimistic locking error from mod-inventory, it expects a JSON structure. The response was changed to a plain string, which causes an error during message processing. | |
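Most of the causes above end the same way: the confirmation never arrives, or arrives under an ID nobody is waiting for, and the wait times out. The following is a minimal sketch of that mechanism using only the Java standard library. It is not the actual mod-quick-marc code; the class `ConfirmationWaitSketch`, its methods, the `Outcome` enum, and the status string `"UPDATED"` are hypothetical names chosen for illustration.

```java
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

// Hypothetical stand-in for the confirmation wait; not the module's real implementation.
final class ConfirmationWaitSketch {

  enum Outcome { CONFIRMED, FAILED, TIMED_OUT }

  // Pending updates keyed by the ID the caller expects to see in the confirmation event.
  private final Map<String, CompletableFuture<String>> pending = new ConcurrentHashMap<>();

  // Registers a pending update and returns the future the HTTP layer would wait on.
  CompletableFuture<String> register(String expectedId) {
    return pending.computeIfAbsent(expectedId, id -> new CompletableFuture<>());
  }

  // Called when a confirmation event arrives. Returns false if nobody waits for this ID.
  boolean onConfirmation(String eventId, String status) {
    CompletableFuture<String> future = pending.remove(eventId);
    return future != null && future.complete(status);
  }

  // Waits up to timeoutMillis for the confirmation and classifies the result.
  static Outcome awaitOutcome(CompletableFuture<String> future, long timeoutMillis) {
    try {
      future.get(timeoutMillis, TimeUnit.MILLISECONDS);
      return Outcome.CONFIRMED;
    } catch (TimeoutException e) {
      return Outcome.TIMED_OUT; // per the table above, this case surfaces as a 500 error
    } catch (ExecutionException e) {
      return Outcome.FAILED;
    } catch (InterruptedException e) {
      Thread.currentThread().interrupt();
      return Outcome.FAILED;
    }
  }

  public static void main(String[] args) {
    ConfirmationWaitSketch sketch = new ConfirmationWaitSketch();

    // First update: the event carries the expected ID, so the wait completes.
    CompletableFuture<String> first = sketch.register("id-1");
    sketch.onConfirmation("id-1", "UPDATED");
    System.out.println(awaitOutcome(first, 100));

    // Later update: the event carries a changed ID, so nothing completes the future.
    CompletableFuture<String> second = sketch.register("id-1");
    sketch.onConfirmation("id-2", "UPDATED");
    System.out.println(awaitOutcome(second, 100));
  }
}
```

In this sketch the second wait can only end in `TIMED_OUT`, which mirrors the ID-mismatch row: the event was delivered, but it could not be matched to the pending request.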

- the issue is NOT related to multi-tenant versus single tenant

- the issue is NOT related to how the data is loaded (via data import or directly to storage)

- the issue is NOT related to how the data is updated (via data import, inventory, or MARC authority app UI)

The issue could be related to updating data directly in storage, in cases where the inventory-storage record ID is not consistent with, or not linked to, the source-storage record ID.

Main problems

- The quickMarc update flow is implemented very similarly to the data-import flow but has not been updated with the latest data-import improvements

- The quickMarc update flow uses Kafka settings that differ from those of data-import

- The async-to-sync approach used in quickMarc has no error handling (any problem results in a 500 error)

Plan

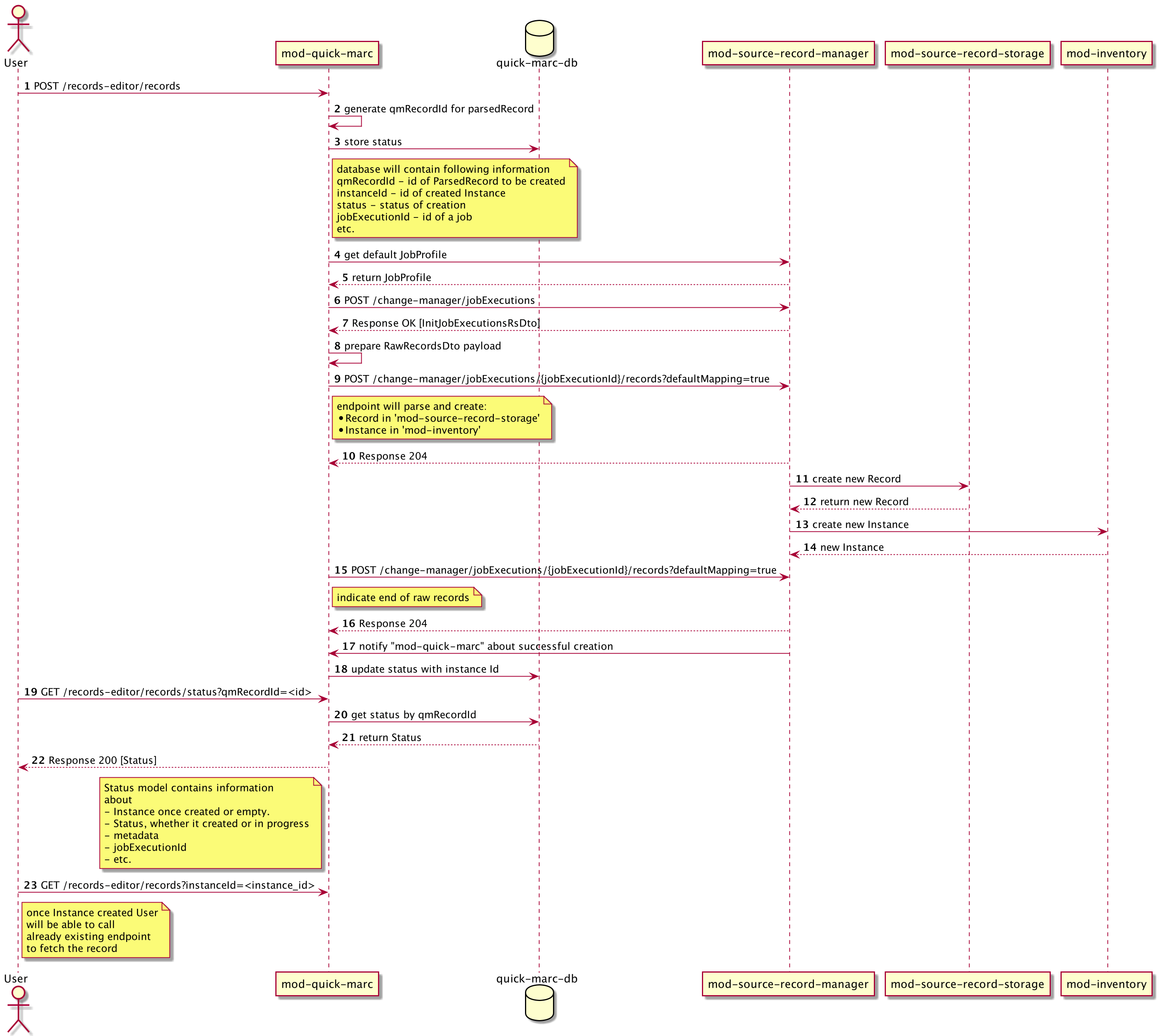

Migrate update flow to data-import

- Create default update profiles for each record type (these could be hidden)

- Reuse the code base already implemented for deriving MARC bib and creating MARC holdings records to initialize the data-import job

- Use ReplyingKafkaTemplate instead of the combination of DeferredResult and a cache

- Configure the template to receive events from the DI_COMPLETED and DI_ERROR topics

- Modify the data-import payload to always populate the correlationId Kafka header if it exists in the initial event

- Start processing the data-import record by sending DI_RAW_RECORDS_CHUNK_READ instead of using the POST /jobExecutions/{jobExecutionId}/records endpoint

- Specify a timeout for receiving the confirmation (1 min?)

- If the timeout is exceeded, use a combination of the GET /metadata-provider/jobLogEntries/{jobExecutionId} and GET /metadata-provider/jobLogEntries/{jobExecutionId}/records/{recordId} endpoints to get the status of the job and the error message if it failed
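The request-reply part of the plan above can be sketched without any Kafka dependency. The example below is a simplified, standard-library-only illustration of the idea behind correlating a reply with its request and falling back to a status lookup on timeout. It does not use Spring's ReplyingKafkaTemplate, and the class `RequestReplySketch`, its methods, and the `jobStatusLookup` function are hypothetical; in a real implementation the lookup would presumably call the metadata-provider endpoints listed above.

```java
import java.util.Map;
import java.util.UUID;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;
import java.util.function.Function;

// Hypothetical request-reply helper; illustrates the flow only, not a real Kafka client.
final class RequestReplySketch {

  // Reply futures keyed by the correlation ID carried in the (simulated) message header.
  private final Map<String, CompletableFuture<String>> replies = new ConcurrentHashMap<>();

  // Assumed fallback that resolves a job execution ID to a status description.
  private final Function<String, String> jobStatusLookup;

  RequestReplySketch(Function<String, String> jobStatusLookup) {
    this.jobStatusLookup = jobStatusLookup;
  }

  // Simulates sending the initial event: creates a correlation ID and registers a reply future.
  String send() {
    String correlationId = UUID.randomUUID().toString();
    replies.put(correlationId, new CompletableFuture<>());
    return correlationId;
  }

  // Simulates an event from DI_COMPLETED or DI_ERROR that echoes the correlation ID.
  void onReply(String correlationId, String topic) {
    CompletableFuture<String> future = replies.get(correlationId);
    if (future != null) {
      future.complete(topic);
    }
  }

  // Waits for the reply; on timeout, asks the fallback for the job status instead.
  String awaitResult(String correlationId, String jobExecutionId, long timeoutMillis) {
    CompletableFuture<String> future = replies.get(correlationId);
    if (future == null) {
      throw new IllegalArgumentException("Unknown correlation ID: " + correlationId);
    }
    try {
      return future.get(timeoutMillis, TimeUnit.MILLISECONDS);
    } catch (TimeoutException e) {
      return jobStatusLookup.apply(jobExecutionId);
    } catch (ExecutionException e) {
      throw new IllegalStateException("Reply future failed", e);
    } catch (InterruptedException e) {
      Thread.currentThread().interrupt();
      throw new IllegalStateException("Interrupted while waiting for reply", e);
    } finally {
      replies.remove(correlationId); // avoid leaking futures for finished requests
    }
  }

  public static void main(String[] args) {
    RequestReplySketch sketch = new RequestReplySketch(jobId -> "status looked up for " + jobId);

    // Reply arrives in time: the topic name is returned.
    String c1 = sketch.send();
    sketch.onReply(c1, "DI_COMPLETED");
    System.out.println(sketch.awaitResult(c1, "job-1", 100));

    // No reply: after the timeout, the fallback lookup supplies the result.
    String c2 = sketch.send();
    System.out.println(sketch.awaitResult(c2, "job-2", 100));
  }
}
```

The point of the fallback is that a timeout no longer has to be reported as a generic 500 error: the caller can still learn whether the job succeeded or failed, and why.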

Async-to-sync or status ping approach?

async-to-sync

status ping

| Pros | Cons |
|---|---|
| All processes in quickMarc are consistent and easy to maintain | All future bugs in data-import will also affect quickMarc |
| Kafka configuration is inherited from the data-import Kafka configuration | Slight performance degradation |
| All future improvements to data-import will also apply to quickMarc | |

Some features could be easily implemented in the future based on these changes:


Prevention plan

- Fix the Karate tests for mod-quick-marc so that they can be relied on. Possible solution: use mod-srs and mod-inventory-storage endpoints to populate records instead of using data-import.

- Continuously update Karate, module integration, and unit tests each time a new edge-case bug is found and fixed.